Supervised | Unsupervised Learning : Detecting and Evaluating Anomalies in Categorical Data Under Supervised and Unsupervised Settings¶

- 1. Table of Contents

- 1.1 Data Background

- 1.2 Data Description

- 1.3 Data Quality Assessment

- 1.4 Data Preprocessing

- 1.5 Data Exploration

- 1.6 Model Hyperparameter Tuning and Evaluation Preparation

- 1.7 Model Development With Synthetic Ground Truth Labels

- 1.8 Model Development Without Ground Truth Labels

- 1.9 Consolidated Findings

- 2. Summary

- 3. References

1. Table of Contents ¶

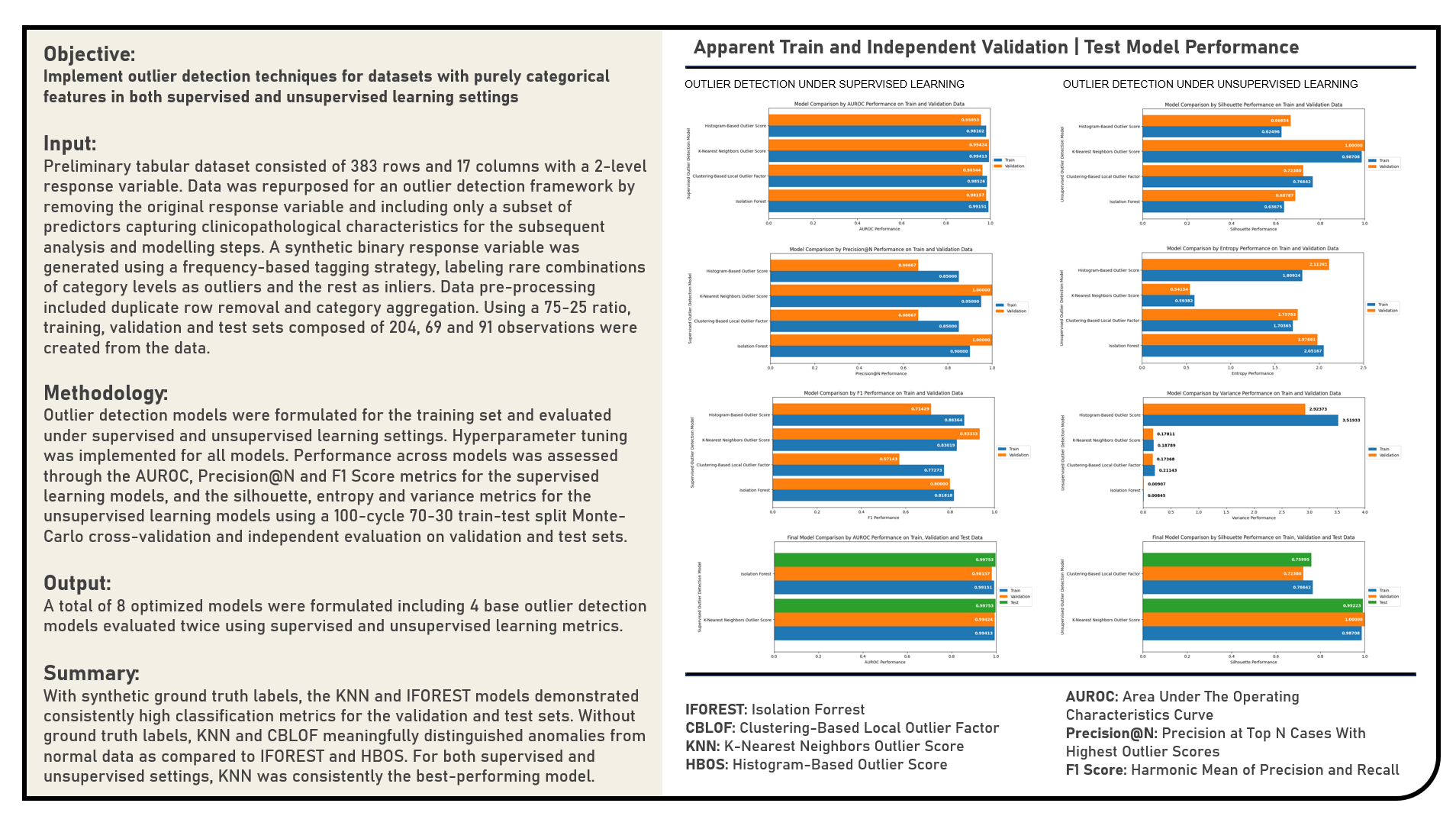

This project explores various Outlier Detection techniques specifically tailored for datasets with purely categorical features, utilizing multiple tools and libraries available in Python. The analysis was carried out in two distinct experimental settings: a Supervised Setting where synthetic outlier ground truth labels are available, and an Unsupervised Setting where no labels are assumed. The methods applied in both settings span a diverse range of non-deep learning techniques, including classical statistical, distance-based, and pattern-based models including: Isolation Forest, Clustering-Based Local Outlier Factor (CBLOF), K-Nearest Neighbors (KNN) and Histogram-Based Outlier Score (HBOS). In the supervised setting, outlier detection methods were evaluated using traditional classification metrics such as the Area Under the Receiver Operating Characteristic Curve(AUROC), F1-Score, and Precision@N to assess their ability to distinguish true outliers from normal observations. In contrast, the unsupervised setting employed label-agnostic evaluation strategies, leveraging internal and score-based metrics such as Silhouette Score on Outlier Scores, Outlier Score Entropy and Score Variance. These were complemented by Principal Component Analysis (PCA) and Uniform Manifold Approximation and Projection (UMAP) Visualizations to assess the score separability and clustering quality across methods. This dual-framework approach allows for a comprehensive understanding of how each method performs under both label-available and label-free conditions, providing a rigorous basis for outlier detection in categorical data contexts. All results were consolidated in a Summary presented at the end of the document.

Outlier Detection in datasets composed exclusively of categorical variables poses unique challenges because most traditional techniques rely on notions like distance, density, or variance—concepts naturally defined in numerical spaces. In contrast, categorical data lacks inherent numeric relationships or ordering, making it non-trivial to apply distance-based or distribution-based methods directly. However, there are still structured and principled approaches to identify anomalies in such datasets. At its core, an outlier in categorical data is an observation that exhibits a rare or unexpected combination of attribute levels. While each individual variable may contain common categories, their joint configuration may be highly improbable, making the observation an outlier even if no single variable is unusual in isolation. The most basic yet powerful approach begins by examining frequency distributions by way of tallying the count of each unique record configuration and flagging those that occur rarely or not at all in the rest of the data as potential anomalies. To go a step further, one can estimate probabilities of individual variable levels and their joint probabilities across combinations of features. This idea underlies models where the joint likelihood of feature values is used as an outlier score. However, due to the curse of dimensionality — even with a moderate number of variables — the number of possible category combinations grows exponentially, making pure joint frequency estimation unreliable in high dimensions. This motivates the use of dimensionality reduction techniques which maps categorical data into a continuous latent space. In this lower-dimensional space, standard outlier detection techniques can be applied more reliably. In other cases, categorical variables are transformed using encoding schemes to enable the use of well-established numeric algorithms. For instance, one-hot encoding represents each level of a categorical variable as a binary vector, while ordinal encoding assigns arbitrary numeric values to categories. More advanced encodings like entity embeddings can preserve semantic similarity between categories learned via neural networks. Once encoded, various outlier detection methods can be applied, provided the encoding faithfully retains category relationships. An alternative family of methods relies on clustering to group similar categorical records. Outliers are identified either as records not belonging to any dense cluster, or as records that lie far from their cluster's central profile. Finally, rule-based outlier detection extract conditional patterns and flag records that violate these frequent rules. In conclusion, while outlier detection in categorical data is fundamentally different from numeric data due to the lack of inherent distances or ordering, a combination of probability modeling, encoding and transformation, rule learning, and clustering can be used to detect anomalies effectively. The choice of method depends on the structure of the data, the cardinality of features, and whether interpretability or predictive performance is prioritized.

Outlier Detection In Categorical Data With Ground Truth Labels becomes a supervised classification task. In this setting, the goal is not just to detect anomalies, but to train models that can predict outlier status reliably, based on known labeled examples. This scenario is relatively rare in real-world applications, but it allows for robust evaluation and algorithm benchmarking. To begin, each observation in the dataset is tagged as either an "inlier" or an "outlier". This label can be derived from domain expertise, human annotation, or synthetic injection of anomalies for experimental setups. The categorical features are then encoded using techniques such as one-hot, ordinal, or entity embeddings so that they can be processed by standard supervised learning models or outlier scoring algorithms. Outlier detection methods are then trained on these encoded features. Even though these methods are typically unsupervised, in this scenario, their outputs—namely, the anomaly scores — can be evaluated using the known labels. Evaluation metrics for this setting include: Precision@N (a variation of precision that is particularly useful in ranking problems like outlier detection for evaluating the top N most confident predictions rather than all predictions), F1-Score (harmonic mean of precision and recall for balancing both metrics, particularly useful when there's a tradeoff), AUROC (Area Under the Receiver Operating Characteristic Curve) (evaluates the model’s ability to distinguish between inliers and outliers across various thresholds, where a higher value indicates better separability). This setup allows for quantitative comparison of different models and hyperparameters. Because true outliers are known, models can be ranked, tuned, and selected with confidence.

Outlier Detection In Categorical Data Without Ground Truth Labels is a purely unsupervised learning task. This introduces significant challenges: since the true identity of outliers is unknown, models cannot be trained or validated using standard supervised metrics. Instead, evaluation shifts toward the intrinsic structure of the data and the behavior of anomaly scores. To begin, categorical data must be carefully encoded using strategies that retain their semantic meaning. Common encoding methods include one-hot encoding (to preserve disjoint category identity), ordinal encoding (for ordered categories), and entity embeddings (to capture latent similarity among categories). Once the data is numerically represented, various unsupervised algorithms can be applied to compute outlier scores. These scores reflect the degree of "outlierness" of each observation based on algorithm-specific logic such as isolation depth, density deviation, or cluster distance. In the absence of ground truth labels, the quality of these scores is evaluated using unsupervised score-based metrics. These metrics help assess whether the algorithm has meaningfully differentiated outliers from inliers in a data-driven way including Outlier Score Entropy (measures the unpredictability or uniformity in the distribution of outlier scores where a very low entropy may indicate that the model is not distinguishing between normal and anomalous observations), score variance (examines the spread of anomaly scores across all observations where a low variance suggests that the model assigns similar scores to most points, possibly indicating insensitivity to actual structure), silhouette score on outlier scores (clusters the outlier scores themselves into two or more groups and evaluates how well-separated these clusters are with a high silhouette score suggesting that the model produces score groupings that align with distinguishable data behavior, reinforcing the meaningfulness of its outlier assignments) and clustering stability (assesses how consistent the outlier groupings remain when the input data or model parameters are slightly perturbed with low variability across runs implying that the model is robust and not overly sensitive to sampling artifacts, which boosts confidence in the flagged anomalies). These unsupervised evaluation techniques offer a practical lens through which model performance can be judged, even in the complete absence of labeled anomalies. When multiple models consistently flag the same observations as anomalous, or when scores exhibit structured and stable separation, this further validates the relevance of the identified outliers. Ultimately, there is no single "correct" model in unsupervised outlier detection without ground truth. Instead, a combination of score distribution analysis, clustering behavior, consistency checks, and domain interpretability is used to triangulate the credibility of the results. This makes practitioner insight and a deep understanding of the domain especially important when applying these methods to categorical data.

1.1. Data Background ¶

An open Thyroid Disease Dataset from Kaggle (with all credits attributed to Jai Naru and Abuchi Onwuegbusi) was used for the analysis as consolidated from the following primary sources:

- Reference Repository entitled Differentiated Thyroid Cancer Recurrence from UC Irvine Machine Learning Repository

- Research Paper entitled Machine Learning for Risk Stratification of Thyroid Cancer Patients: a 15-year Cohort Study from the European Archives of Oto-Rhino-Laryngology

This study hypothesized that the various clinicopathological characteristics influence differentiated thyroid cancer recurrence between patients.

The dichotomous categorical variable for the study is:

- Recurred - Status of the patient (Yes, Recurrence of differentiated thyroid cancer | No, No recurrence of differentiated thyroid cancer)

The predictor variables for the study are:

- Age - Patient's age (Years)

- Gender - Patient's sex (M | F)

- Smoking - Indication of smoking (Yes | No)

- Hx Smoking - Indication of smoking history (Yes | No)

- Hx Radiotherapy - Indication of radiotherapy history for any condition (Yes | No)

- Thyroid Function - Status of thyroid function (Clinical Hyperthyroidism, Hypothyroidism | Subclinical Hyperthyroidism, Hypothyroidism | Euthyroid)

- Physical Examination - Findings from physical examination including palpation of the thyroid gland and surrounding structures (Normal | Diffuse Goiter | Multinodular Goiter | Single Nodular Goiter Left, Right)

- Adenopathy - Indication of enlarged lymph nodes in the neck region (No | Right | Extensive | Left | Bilateral | Posterior)

- Pathology - Specific thyroid cancer type as determined by pathology examination of biopsy samples (Follicular | Hurthel Cell | Micropapillary | Papillary)

- Focality - Indication if the cancer is limited to one location or present in multiple locations (Uni-Focal | Multi-Focal)

- Risk - Risk category of the cancer based on various factors, such as tumor size, extent of spread, and histological type (Low | Intermediate | High)

- T - Tumor classification based on its size and extent of invasion into nearby structures (T1a | T1b | T2 | T3a | T3b | T4a | T4b)

- N - Nodal classification indicating the involvement of lymph nodes (N0 | N1a | N1b)

- M - Metastasis classification indicating the presence or absence of distant metastases (M0 | M1)

- Stage - Overall stage of the cancer, typically determined by combining T, N, and M classifications (I | II | III | IVa | IVb)

- Response - Cancer's response to treatment (Biochemical Incomplete | Indeterminate | Excellent | Structural Incomplete)

While the original dataset was designed for a categorical classification task predicting thyroid cancer recurrence, this study repurposes it for an outlier detection framework. In this revised context, the original response variable will be excluded, and only a subset of predictors—specifically those capturing clinicopathological characteristics—will be retained. These predictors will be converted into binary categorical variables to standardize representation. A synthetic binary response variable will then be generated using a frequency-based tagging strategy, labeling rare combinations of category levels as outliers and the rest as inliers. The goal is to estimate outlier scores for each observation and assess model performance in both supervised settings (using the synthetic labels) and unsupervised settings (without labels). This approach allows for evaluating the effectiveness of categorical outlier detection methods in a medically relevant context where rare clinicopathological profiles may signify atypical or high-risk cases.

1.2. Data Description ¶

- The initial tabular dataset was comprised of 383 observations and 17 variables (including 1 target and 16 predictors).

- 383 rows (observations)

- 17 columns (variables)

- 1/17 target (categorical)

- Recurred

- 1/17 predictor (numeric)

- Age

- 16/17 predictor (categorical)

- Gender

- Smoking

- Hx_Smoking

- Hx_Radiotherapy

- Thyroid_Function

- Physical_Examination

- Adenopathy

- Pathology

- Focality

- Risk

- T

- N

- M

- Stage

- Response

- 1/17 target (categorical)

##################################

# Loading Python Libraries

##################################

import numpy as np

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

import joblib

import itertools

import os

import pickle

import plotly.express as px

import plotly.io as pio

pio.renderers.default = 'notebook_connected'

%matplotlib inline

from operator import truediv

from sklearn.preprocessing import LabelEncoder

from scipy.stats import chi2_contingency, entropy

from sklearn.cluster import KMeans

from sklearn.decomposition import PCA

from sklearn.manifold import TSNE

from sklearn.metrics import silhouette_score, roc_auc_score, precision_score, f1_score

from sklearn.model_selection import train_test_split, StratifiedShuffleSplit, ParameterGrid

import umap.umap_ as umap

from pyod.models.knn import KNN

from pyod.models.hbos import HBOS

from pyod.models.cblof import CBLOF

from pyod.models.iforest import IForest

import warnings

warnings.filterwarnings("ignore", category=FutureWarning, module="sklearn")

warnings.filterwarnings("ignore", category=UserWarning, module="umap")

##################################

# Defining file paths

##################################

DATASETS_ORIGINAL_PATH = r"datasets\original"

DATASETS_FINAL_PATH = r"datasets\final\complete"

DATASETS_FINAL_TRAIN_PATH = r"datasets\final\train"

DATASETS_FINAL_TRAIN_FEATURES_PATH = r"datasets\final\train\features"

DATASETS_FINAL_TRAIN_TARGET_PATH = r"datasets\final\train\target"

DATASETS_FINAL_VALIDATION_PATH = r"datasets\final\validation"

DATASETS_FINAL_VALIDATION_FEATURES_PATH = r"datasets\final\validation\features"

DATASETS_FINAL_VALIDATION_TARGET_PATH = r"datasets\final\validation\target"

DATASETS_FINAL_TEST_PATH = r"datasets\final\test"

DATASETS_FINAL_TEST_FEATURES_PATH = r"datasets\final\test\features"

DATASETS_FINAL_TEST_TARGET_PATH = r"datasets\final\test\target"

MODELS_PATH = r"models"

##################################

# Loading the dataset

# from the DATASETS_ORIGINAL_PATH

##################################

thyroid_cancer = pd.read_csv(os.path.join("..", DATASETS_ORIGINAL_PATH, "Thyroid_Diff.csv"))

##################################

# Performing a general exploration of the dataset

##################################

print('Dataset Dimensions: ')

display(thyroid_cancer.shape)

Dataset Dimensions:

(383, 17)

##################################

# Listing the column names and data types

##################################

print('Column Names and Data Types:')

display(thyroid_cancer.dtypes)

Column Names and Data Types:

Age int64 Gender object Smoking object Hx Smoking object Hx Radiotherapy object Thyroid Function object Physical Examination object Adenopathy object Pathology object Focality object Risk object T object N object M object Stage object Response object Recurred object dtype: object

##################################

# Renaming and standardizing the column names

# to replace blanks with undercores

##################################

thyroid_cancer.columns = thyroid_cancer.columns.str.replace(" ", "_")

##################################

# Taking a snapshot of the dataset

##################################

thyroid_cancer.head()

| Age | Gender | Smoking | Hx_Smoking | Hx_Radiotherapy | Thyroid_Function | Physical_Examination | Adenopathy | Pathology | Focality | Risk | T | N | M | Stage | Response | Recurred | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 27 | F | No | No | No | Euthyroid | Single nodular goiter-left | No | Micropapillary | Uni-Focal | Low | T1a | N0 | M0 | I | Indeterminate | No |

| 1 | 34 | F | No | Yes | No | Euthyroid | Multinodular goiter | No | Micropapillary | Uni-Focal | Low | T1a | N0 | M0 | I | Excellent | No |

| 2 | 30 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Micropapillary | Uni-Focal | Low | T1a | N0 | M0 | I | Excellent | No |

| 3 | 62 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Micropapillary | Uni-Focal | Low | T1a | N0 | M0 | I | Excellent | No |

| 4 | 62 | F | No | No | No | Euthyroid | Multinodular goiter | No | Micropapillary | Multi-Focal | Low | T1a | N0 | M0 | I | Excellent | No |

##################################

# Selecting categorical columns (both object and categorical types)

# and listing the unique categorical levels

##################################

cat_cols = thyroid_cancer.select_dtypes(include=["object", "category"]).columns

for col in cat_cols:

print(f"Categorical | Object Column: {col}")

print(thyroid_cancer[col].unique())

print("-" * 40)

Categorical | Object Column: Gender ['F' 'M'] ---------------------------------------- Categorical | Object Column: Smoking ['No' 'Yes'] ---------------------------------------- Categorical | Object Column: Hx_Smoking ['No' 'Yes'] ---------------------------------------- Categorical | Object Column: Hx_Radiotherapy ['No' 'Yes'] ---------------------------------------- Categorical | Object Column: Thyroid_Function ['Euthyroid' 'Clinical Hyperthyroidism' 'Clinical Hypothyroidism' 'Subclinical Hyperthyroidism' 'Subclinical Hypothyroidism'] ---------------------------------------- Categorical | Object Column: Physical_Examination ['Single nodular goiter-left' 'Multinodular goiter' 'Single nodular goiter-right' 'Normal' 'Diffuse goiter'] ---------------------------------------- Categorical | Object Column: Adenopathy ['No' 'Right' 'Extensive' 'Left' 'Bilateral' 'Posterior'] ---------------------------------------- Categorical | Object Column: Pathology ['Micropapillary' 'Papillary' 'Follicular' 'Hurthel cell'] ---------------------------------------- Categorical | Object Column: Focality ['Uni-Focal' 'Multi-Focal'] ---------------------------------------- Categorical | Object Column: Risk ['Low' 'Intermediate' 'High'] ---------------------------------------- Categorical | Object Column: T ['T1a' 'T1b' 'T2' 'T3a' 'T3b' 'T4a' 'T4b'] ---------------------------------------- Categorical | Object Column: N ['N0' 'N1b' 'N1a'] ---------------------------------------- Categorical | Object Column: M ['M0' 'M1'] ---------------------------------------- Categorical | Object Column: Stage ['I' 'II' 'IVB' 'III' 'IVA'] ---------------------------------------- Categorical | Object Column: Response ['Indeterminate' 'Excellent' 'Structural Incomplete' 'Biochemical Incomplete'] ---------------------------------------- Categorical | Object Column: Recurred ['No' 'Yes'] ----------------------------------------

##################################

# Correcting a category level

##################################

thyroid_cancer["Pathology"] = thyroid_cancer["Pathology"].replace("Hurthel cell", "Hurthle Cell")

##################################

# Setting the levels of the categorical variables

##################################

thyroid_cancer['Recurred'] = thyroid_cancer['Recurred'].astype('category')

thyroid_cancer['Recurred'] = thyroid_cancer['Recurred'].cat.set_categories(['No', 'Yes'], ordered=True)

thyroid_cancer['Gender'] = thyroid_cancer['Gender'].astype('category')

thyroid_cancer['Gender'] = thyroid_cancer['Gender'].cat.set_categories(['M', 'F'], ordered=True)

thyroid_cancer['Smoking'] = thyroid_cancer['Smoking'].astype('category')

thyroid_cancer['Smoking'] = thyroid_cancer['Smoking'].cat.set_categories(['No', 'Yes'], ordered=True)

thyroid_cancer['Hx_Smoking'] = thyroid_cancer['Hx_Smoking'].astype('category')

thyroid_cancer['Hx_Smoking'] = thyroid_cancer['Hx_Smoking'].cat.set_categories(['No', 'Yes'], ordered=True)

thyroid_cancer['Hx_Radiotherapy'] = thyroid_cancer['Hx_Radiotherapy'].astype('category')

thyroid_cancer['Hx_Radiotherapy'] = thyroid_cancer['Hx_Radiotherapy'].cat.set_categories(['No', 'Yes'], ordered=True)

thyroid_cancer['Thyroid_Function'] = thyroid_cancer['Thyroid_Function'].astype('category')

thyroid_cancer['Thyroid_Function'] = thyroid_cancer['Thyroid_Function'].cat.set_categories(['Euthyroid', 'Subclinical Hypothyroidism', 'Subclinical Hyperthyroidism', 'Clinical Hypothyroidism', 'Clinical Hyperthyroidism'], ordered=True)

thyroid_cancer['Physical_Examination'] = thyroid_cancer['Physical_Examination'].astype('category')

thyroid_cancer['Physical_Examination'] = thyroid_cancer['Physical_Examination'].cat.set_categories(['Normal', 'Single nodular goiter-left', 'Single nodular goiter-right', 'Multinodular goiter', 'Diffuse goiter'], ordered=True)

thyroid_cancer['Adenopathy'] = thyroid_cancer['Adenopathy'].astype('category')

thyroid_cancer['Adenopathy'] = thyroid_cancer['Adenopathy'].cat.set_categories(['No', 'Left', 'Right', 'Bilateral', 'Posterior', 'Extensive'], ordered=True)

thyroid_cancer['Pathology'] = thyroid_cancer['Pathology'].astype('category')

thyroid_cancer['Pathology'] = thyroid_cancer['Pathology'].cat.set_categories(['Hurthle Cell', 'Follicular', 'Micropapillary', 'Papillary'], ordered=True)

thyroid_cancer['Focality'] = thyroid_cancer['Focality'].astype('category')

thyroid_cancer['Focality'] = thyroid_cancer['Focality'].cat.set_categories(['Uni-Focal', 'Multi-Focal'], ordered=True)

thyroid_cancer['Risk'] = thyroid_cancer['Risk'].astype('category')

thyroid_cancer['Risk'] = thyroid_cancer['Risk'].cat.set_categories(['Low', 'Intermediate', 'High'], ordered=True)

thyroid_cancer['T'] = thyroid_cancer['T'].astype('category')

thyroid_cancer['T'] = thyroid_cancer['T'].cat.set_categories(['T1a', 'T1b', 'T2', 'T3a', 'T3b', 'T4a', 'T4b'], ordered=True)

thyroid_cancer['N'] = thyroid_cancer['N'].astype('category')

thyroid_cancer['N'] = thyroid_cancer['N'].cat.set_categories(['N0', 'N1a', 'N1b'], ordered=True)

thyroid_cancer['M'] = thyroid_cancer['M'].astype('category')

thyroid_cancer['M'] = thyroid_cancer['M'].cat.set_categories(['M0', 'M1'], ordered=True)

thyroid_cancer['Stage'] = thyroid_cancer['Stage'].astype('category')

thyroid_cancer['Stage'] = thyroid_cancer['Stage'].cat.set_categories(['I', 'II', 'III', 'IVA', 'IVB'], ordered=True)

thyroid_cancer['Response'] = thyroid_cancer['Response'].astype('category')

thyroid_cancer['Response'] = thyroid_cancer['Response'].cat.set_categories(['Excellent', 'Structural Incomplete', 'Biochemical Incomplete', 'Indeterminate'], ordered=True)

##################################

# Performing a general exploration of the numeric variables

##################################

print('Numeric Variable Summary:')

display(thyroid_cancer.describe(include='number').transpose())

Numeric Variable Summary:

| count | mean | std | min | 25% | 50% | 75% | max | |

|---|---|---|---|---|---|---|---|---|

| Age | 383.0 | 40.866841 | 15.134494 | 15.0 | 29.0 | 37.0 | 51.0 | 82.0 |

##################################

# Performing a general exploration of the categorical variables

##################################

print('Categorical Variable Summary:')

display(thyroid_cancer.describe(include='category').transpose())

Categorical Variable Summary:

| count | unique | top | freq | |

|---|---|---|---|---|

| Gender | 383 | 2 | F | 312 |

| Smoking | 383 | 2 | No | 334 |

| Hx_Smoking | 383 | 2 | No | 355 |

| Hx_Radiotherapy | 383 | 2 | No | 376 |

| Thyroid_Function | 383 | 5 | Euthyroid | 332 |

| Physical_Examination | 383 | 5 | Single nodular goiter-right | 140 |

| Adenopathy | 383 | 6 | No | 277 |

| Pathology | 383 | 4 | Papillary | 287 |

| Focality | 383 | 2 | Uni-Focal | 247 |

| Risk | 383 | 3 | Low | 249 |

| T | 383 | 7 | T2 | 151 |

| N | 383 | 3 | N0 | 268 |

| M | 383 | 2 | M0 | 365 |

| Stage | 383 | 5 | I | 333 |

| Response | 383 | 4 | Excellent | 208 |

| Recurred | 383 | 2 | No | 275 |

##################################

# Performing a general exploration of the categorical variable levels

# based on the ordered categories

##################################

ordered_cat_cols = thyroid_cancer.select_dtypes(include=["category"]).columns

for col in ordered_cat_cols:

print(f"Column: {col}")

print("Absolute Frequencies:")

print(thyroid_cancer[col].value_counts().reindex(thyroid_cancer[col].cat.categories))

print("\nNormalized Frequencies:")

print(thyroid_cancer[col].value_counts(normalize=True).reindex(thyroid_cancer[col].cat.categories))

print("-" * 50)

Column: Gender Absolute Frequencies: M 71 F 312 Name: count, dtype: int64 Normalized Frequencies: M 0.185379 F 0.814621 Name: proportion, dtype: float64 -------------------------------------------------- Column: Smoking Absolute Frequencies: No 334 Yes 49 Name: count, dtype: int64 Normalized Frequencies: No 0.872063 Yes 0.127937 Name: proportion, dtype: float64 -------------------------------------------------- Column: Hx_Smoking Absolute Frequencies: No 355 Yes 28 Name: count, dtype: int64 Normalized Frequencies: No 0.926893 Yes 0.073107 Name: proportion, dtype: float64 -------------------------------------------------- Column: Hx_Radiotherapy Absolute Frequencies: No 376 Yes 7 Name: count, dtype: int64 Normalized Frequencies: No 0.981723 Yes 0.018277 Name: proportion, dtype: float64 -------------------------------------------------- Column: Thyroid_Function Absolute Frequencies: Euthyroid 332 Subclinical Hypothyroidism 14 Subclinical Hyperthyroidism 5 Clinical Hypothyroidism 12 Clinical Hyperthyroidism 20 Name: count, dtype: int64 Normalized Frequencies: Euthyroid 0.866841 Subclinical Hypothyroidism 0.036554 Subclinical Hyperthyroidism 0.013055 Clinical Hypothyroidism 0.031332 Clinical Hyperthyroidism 0.052219 Name: proportion, dtype: float64 -------------------------------------------------- Column: Physical_Examination Absolute Frequencies: Normal 7 Single nodular goiter-left 89 Single nodular goiter-right 140 Multinodular goiter 140 Diffuse goiter 7 Name: count, dtype: int64 Normalized Frequencies: Normal 0.018277 Single nodular goiter-left 0.232376 Single nodular goiter-right 0.365535 Multinodular goiter 0.365535 Diffuse goiter 0.018277 Name: proportion, dtype: float64 -------------------------------------------------- Column: Adenopathy Absolute Frequencies: No 277 Left 17 Right 48 Bilateral 32 Posterior 2 Extensive 7 Name: count, dtype: int64 Normalized Frequencies: No 0.723238 Left 0.044386 Right 0.125326 Bilateral 0.083551 Posterior 0.005222 Extensive 0.018277 Name: proportion, dtype: float64 -------------------------------------------------- Column: Pathology Absolute Frequencies: Hurthle Cell 20 Follicular 28 Micropapillary 48 Papillary 287 Name: count, dtype: int64 Normalized Frequencies: Hurthle Cell 0.052219 Follicular 0.073107 Micropapillary 0.125326 Papillary 0.749347 Name: proportion, dtype: float64 -------------------------------------------------- Column: Focality Absolute Frequencies: Uni-Focal 247 Multi-Focal 136 Name: count, dtype: int64 Normalized Frequencies: Uni-Focal 0.644909 Multi-Focal 0.355091 Name: proportion, dtype: float64 -------------------------------------------------- Column: Risk Absolute Frequencies: Low 249 Intermediate 102 High 32 Name: count, dtype: int64 Normalized Frequencies: Low 0.650131 Intermediate 0.266319 High 0.083551 Name: proportion, dtype: float64 -------------------------------------------------- Column: T Absolute Frequencies: T1a 49 T1b 43 T2 151 T3a 96 T3b 16 T4a 20 T4b 8 Name: count, dtype: int64 Normalized Frequencies: T1a 0.127937 T1b 0.112272 T2 0.394256 T3a 0.250653 T3b 0.041775 T4a 0.052219 T4b 0.020888 Name: proportion, dtype: float64 -------------------------------------------------- Column: N Absolute Frequencies: Hurthle Cell 20 Follicular 28 Micropapillary 48 Papillary 287 Name: count, dtype: int64 Normalized Frequencies: Hurthle Cell 0.052219 Follicular 0.073107 Micropapillary 0.125326 Papillary 0.749347 Name: proportion, dtype: float64 -------------------------------------------------- Column: Focality Absolute Frequencies: Uni-Focal 247 Multi-Focal 136 Name: count, dtype: int64 Normalized Frequencies: Uni-Focal 0.644909 Multi-Focal 0.355091 Name: proportion, dtype: float64 -------------------------------------------------- Column: Risk Absolute Frequencies: Low 249 Intermediate 102 High 32 Name: count, dtype: int64 Normalized Frequencies: Low 0.650131 Intermediate 0.266319 High 0.083551 Name: proportion, dtype: float64 -------------------------------------------------- Column: T Absolute Frequencies: T1a 49 T1b 43 T2 151 T3a 96 T3b 16 T4a 20 T4b 8 Name: count, dtype: int64 Normalized Frequencies: T1a 0.127937 T1b 0.112272 T2 0.394256 T3a 0.250653 T3b 0.041775 T4a 0.052219 T4b 0.020888 Name: proportion, dtype: float64 -------------------------------------------------- Column: N Absolute Frequencies: N0 268 N1a 22 N1b 93 Name: count, dtype: int64 Normalized Frequencies: N0 0.699739 N1a 0.057441 N1b 0.242820 Name: proportion, dtype: float64 -------------------------------------------------- Column: M Absolute Frequencies: M0 365 M1 18 Name: count, dtype: int64 Normalized Frequencies: M0 0.953003 M1 0.046997 Name: proportion, dtype: float64 -------------------------------------------------- Column: Stage Absolute Frequencies: I 333 II 32 III 4 IVA 3 IVB 11 Name: count, dtype: int64 Normalized Frequencies: I 0.869452 II 0.083551 III 0.010444 IVA 0.007833 IVB 0.028721 Name: proportion, dtype: float64 -------------------------------------------------- Column: Response Absolute Frequencies: Excellent 208 Structural Incomplete 91 Biochemical Incomplete 23 Indeterminate 61 Name: count, dtype: int64 Normalized Frequencies: Excellent 0.543081 Structural Incomplete 0.237598 Biochemical Incomplete 0.060052 Indeterminate 0.159269 Name: proportion, dtype: float64 -------------------------------------------------- Column: Recurred Absolute Frequencies: No 275 Yes 108 Name: count, dtype: int64 Normalized Frequencies: No 0.718016 Yes 0.281984 Name: proportion, dtype: float64 --------------------------------------------------

1.3. Data Quality Assessment ¶

Data quality findings based on assessment are as follows:

- A total of 19 duplicated rows were identified.

- In total, 34 observations were affected, consisting of 16 unique occurrences and 19 subsequent duplicates.

- These 19 duplicates spanned 16 distinct variations, meaning some variations had multiple duplicates.

- To clean the dataset, all 19 duplicate rows were removed, retaining only the first occurrence of each of the 16 unique variations.

- No missing data noted for any variable with Null.Count>0 and Fill.Rate<1.0.

- Low variance observed for 8 variables with First.Second.Mode.Ratio>5.

- Hx_Radiotherapy: First.Second.Mode.Ratio = 51.000 (comprised 2 category levels)

- M: First.Second.Mode.Ratio = 19.222 (comprised 2 category levels)

- Thyroid_Function: First.Second.Mode.Ratio = 15.650 (comprised 5 category levels)

- Hx_Smoking: First.Second.Mode.Ratio = 12.000 (comprised 2 category levels)

- Stage: First.Second.Mode.Ratio = 9.812 (comprised 5 category levels)

- Smoking: First.Second.Mode.Ratio = 6.428 (comprised 2 category levels)

- Pathology: First.Second.Mode.Ratio = 6.022 (comprised 4 category levels)

- Adenopathy: First.Second.Mode.Ratio = 5.375 (comprised 5 category levels)

- No low variance observed for any variable with Unique.Count.Ratio>10.

- No high skewness observed for any variable with Skewness>3 or Skewness<(-3).

##################################

# Counting the number of duplicated rows

##################################

thyroid_cancer.duplicated().sum()

np.int64(19)

##################################

# Exploring the duplicated rows

##################################

duplicated_rows = thyroid_cancer[thyroid_cancer.duplicated(keep=False)]

display(duplicated_rows)

| Age | Gender | Smoking | Hx_Smoking | Hx_Radiotherapy | Thyroid_Function | Physical_Examination | Adenopathy | Pathology | Focality | Risk | T | N | M | Stage | Response | Recurred | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 8 | 51 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Micropapillary | Uni-Focal | Low | T1a | N0 | M0 | I | Excellent | No |

| 9 | 40 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Micropapillary | Uni-Focal | Low | T1a | N0 | M0 | I | Excellent | No |

| 22 | 36 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Micropapillary | Uni-Focal | Low | T1a | N0 | M0 | I | Excellent | No |

| 32 | 36 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Micropapillary | Uni-Focal | Low | T1a | N0 | M0 | I | Excellent | No |

| 38 | 40 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Micropapillary | Uni-Focal | Low | T1a | N0 | M0 | I | Excellent | No |

| 40 | 51 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Micropapillary | Uni-Focal | Low | T1a | N0 | M0 | I | Excellent | No |

| 61 | 35 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T1b | N0 | M0 | I | Excellent | No |

| 66 | 35 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T1b | N0 | M0 | I | Excellent | No |

| 67 | 51 | F | No | No | No | Euthyroid | Single nodular goiter-left | No | Papillary | Uni-Focal | Low | T1b | N0 | M0 | I | Excellent | No |

| 69 | 51 | F | No | No | No | Euthyroid | Single nodular goiter-left | No | Papillary | Uni-Focal | Low | T1b | N0 | M0 | I | Excellent | No |

| 73 | 29 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T1b | N0 | M0 | I | Excellent | No |

| 77 | 29 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T1b | N0 | M0 | I | Excellent | No |

| 106 | 26 | F | No | No | No | Euthyroid | Multinodular goiter | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 110 | 31 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 113 | 32 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 115 | 37 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 119 | 28 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 120 | 37 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 121 | 26 | F | No | No | No | Euthyroid | Multinodular goiter | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 123 | 28 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 132 | 32 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 136 | 21 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 137 | 32 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 138 | 26 | F | No | No | No | Euthyroid | Multinodular goiter | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 142 | 42 | F | No | No | No | Euthyroid | Multinodular goiter | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 161 | 22 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 166 | 31 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 168 | 21 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 170 | 38 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 175 | 34 | F | No | No | No | Euthyroid | Multinodular goiter | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 178 | 38 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 183 | 26 | F | No | No | No | Euthyroid | Multinodular goiter | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 187 | 34 | F | No | No | No | Euthyroid | Multinodular goiter | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 189 | 42 | F | No | No | No | Euthyroid | Multinodular goiter | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

| 196 | 22 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No |

##################################

# Checking if duplicated rows have identical values across all columns

##################################

num_unique_dup_rows = duplicated_rows.drop_duplicates().shape[0]

num_total_dup_rows = duplicated_rows.shape[0]

if num_unique_dup_rows == 1:

print("All duplicated rows have the same values across all columns.")

else:

print(f"There are {num_unique_dup_rows} unique versions among the {num_total_dup_rows} duplicated rows.")

There are 16 unique versions among the 35 duplicated rows.

##################################

# Counting the unique variations among duplicated rows

##################################

unique_dup_variations = duplicated_rows.drop_duplicates()

variation_counts = duplicated_rows.value_counts().reset_index(name="Count")

print("Unique duplicated row variations and their counts:")

display(variation_counts)

Unique duplicated row variations and their counts:

| Age | Gender | Smoking | Hx_Smoking | Hx_Radiotherapy | Thyroid_Function | Physical_Examination | Adenopathy | Pathology | Focality | Risk | T | N | M | Stage | Response | Recurred | Count | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 26 | F | No | No | No | Euthyroid | Multinodular goiter | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No | 4 |

| 1 | 32 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No | 3 |

| 2 | 22 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No | 2 |

| 3 | 21 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No | 2 |

| 4 | 28 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No | 2 |

| 5 | 29 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T1b | N0 | M0 | I | Excellent | No | 2 |

| 6 | 31 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No | 2 |

| 7 | 34 | F | No | No | No | Euthyroid | Multinodular goiter | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No | 2 |

| 8 | 35 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T1b | N0 | M0 | I | Excellent | No | 2 |

| 9 | 36 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Micropapillary | Uni-Focal | Low | T1a | N0 | M0 | I | Excellent | No | 2 |

| 10 | 37 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No | 2 |

| 11 | 38 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No | 2 |

| 12 | 40 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Micropapillary | Uni-Focal | Low | T1a | N0 | M0 | I | Excellent | No | 2 |

| 13 | 42 | F | No | No | No | Euthyroid | Multinodular goiter | No | Papillary | Uni-Focal | Low | T2 | N0 | M0 | I | Excellent | No | 2 |

| 14 | 51 | F | No | No | No | Euthyroid | Single nodular goiter-left | No | Papillary | Uni-Focal | Low | T1b | N0 | M0 | I | Excellent | No | 2 |

| 15 | 51 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Micropapillary | Uni-Focal | Low | T1a | N0 | M0 | I | Excellent | No | 2 |

##################################

# Removing the duplicated rows and

# retaining only the first occurrence

##################################

thyroid_cancer_row_filtered = thyroid_cancer.drop_duplicates(keep="first")

print('Dataset Dimensions: ')

display(thyroid_cancer_row_filtered.shape)

Dataset Dimensions:

(364, 17)

##################################

# Gathering the data types for each column

##################################

data_type_list = list(thyroid_cancer_row_filtered.dtypes)

##################################

# Gathering the variable names for each column

##################################

variable_name_list = list(thyroid_cancer_row_filtered.columns)

##################################

# Gathering the number of observations for each column

##################################

row_count_list = list([len(thyroid_cancer_row_filtered)] * len(thyroid_cancer_row_filtered.columns))

##################################

# Gathering the number of missing data for each column

##################################

null_count_list = list(thyroid_cancer_row_filtered.isna().sum(axis=0))

##################################

# Gathering the number of non-missing data for each column

##################################

non_null_count_list = list(thyroid_cancer_row_filtered.count())

##################################

# Gathering the missing data percentage for each column

##################################

fill_rate_list = map(truediv, non_null_count_list, row_count_list)

##################################

# Formulating the summary

# for all columns

##################################

all_column_quality_summary = pd.DataFrame(zip(variable_name_list,

data_type_list,

row_count_list,

non_null_count_list,

null_count_list,

fill_rate_list),

columns=['Column.Name',

'Column.Type',

'Row.Count',

'Non.Null.Count',

'Null.Count',

'Fill.Rate'])

display(all_column_quality_summary)

| Column.Name | Column.Type | Row.Count | Non.Null.Count | Null.Count | Fill.Rate | |

|---|---|---|---|---|---|---|

| 0 | Age | int64 | 364 | 364 | 0 | 1.0 |

| 1 | Gender | category | 364 | 364 | 0 | 1.0 |

| 2 | Smoking | category | 364 | 364 | 0 | 1.0 |

| 3 | Hx_Smoking | category | 364 | 364 | 0 | 1.0 |

| 4 | Hx_Radiotherapy | category | 364 | 364 | 0 | 1.0 |

| 5 | Thyroid_Function | category | 364 | 364 | 0 | 1.0 |

| 6 | Physical_Examination | category | 364 | 364 | 0 | 1.0 |

| 7 | Adenopathy | category | 364 | 364 | 0 | 1.0 |

| 8 | Pathology | category | 364 | 364 | 0 | 1.0 |

| 9 | Focality | category | 364 | 364 | 0 | 1.0 |

| 10 | Risk | category | 364 | 364 | 0 | 1.0 |

| 11 | T | category | 364 | 364 | 0 | 1.0 |

| 12 | N | category | 364 | 364 | 0 | 1.0 |

| 13 | M | category | 364 | 364 | 0 | 1.0 |

| 14 | Stage | category | 364 | 364 | 0 | 1.0 |

| 15 | Response | category | 364 | 364 | 0 | 1.0 |

| 16 | Recurred | category | 364 | 364 | 0 | 1.0 |

##################################

# Counting the number of columns

# with Fill.Rate < 1.00

##################################

len(all_column_quality_summary[(all_column_quality_summary['Fill.Rate']<1)])

0

##################################

# Identifying the rows

# with Fill.Rate < 0.90

##################################

column_low_fill_rate = all_column_quality_summary[(all_column_quality_summary['Fill.Rate']<0.90)]

##################################

# Gathering the indices for each observation

##################################

row_index_list = thyroid_cancer_row_filtered.index

##################################

# Gathering the number of columns for each observation

##################################

column_count_list = list([len(thyroid_cancer_row_filtered.columns)] * len(thyroid_cancer_row_filtered))

##################################

# Gathering the number of missing data for each row

##################################

null_row_list = list(thyroid_cancer_row_filtered.isna().sum(axis=1))

##################################

# Gathering the missing data percentage for each column

##################################

missing_rate_list = map(truediv, null_row_list, column_count_list)

##################################

# Identifying the rows

# with missing data

##################################

all_row_quality_summary = pd.DataFrame(zip(row_index_list,

column_count_list,

null_row_list,

missing_rate_list),

columns=['Row.Name',

'Column.Count',

'Null.Count',

'Missing.Rate'])

display(all_row_quality_summary)

| Row.Name | Column.Count | Null.Count | Missing.Rate | |

|---|---|---|---|---|

| 0 | 0 | 17 | 0 | 0.0 |

| 1 | 1 | 17 | 0 | 0.0 |

| 2 | 2 | 17 | 0 | 0.0 |

| 3 | 3 | 17 | 0 | 0.0 |

| 4 | 4 | 17 | 0 | 0.0 |

| ... | ... | ... | ... | ... |

| 359 | 378 | 17 | 0 | 0.0 |

| 360 | 379 | 17 | 0 | 0.0 |

| 361 | 380 | 17 | 0 | 0.0 |

| 362 | 381 | 17 | 0 | 0.0 |

| 363 | 382 | 17 | 0 | 0.0 |

364 rows × 4 columns

##################################

# Counting the number of rows

# with Missing.Rate > 0.00

##################################

len(all_row_quality_summary[(all_row_quality_summary['Missing.Rate']>0.00)])

0

##################################

# Formulating the dataset

# with numeric columns only

##################################

thyroid_cancer_numeric = thyroid_cancer_row_filtered.select_dtypes(include='number')

##################################

# Gathering the variable names for each numeric column

##################################

numeric_variable_name_list = thyroid_cancer_numeric.columns

##################################

# Gathering the minimum value for each numeric column

##################################

numeric_minimum_list = thyroid_cancer_numeric.min()

##################################

# Gathering the mean value for each numeric column

##################################

numeric_mean_list = thyroid_cancer_numeric.mean()

##################################

# Gathering the median value for each numeric column

##################################

numeric_median_list = thyroid_cancer_numeric.median()

##################################

# Gathering the maximum value for each numeric column

##################################

numeric_maximum_list = thyroid_cancer_numeric.max()

##################################

# Gathering the first mode values for each numeric column

##################################

numeric_first_mode_list = [thyroid_cancer_row_filtered[x].value_counts(dropna=True).index.tolist()[0] for x in thyroid_cancer_numeric]

##################################

# Gathering the second mode values for each numeric column

##################################

numeric_second_mode_list = [thyroid_cancer_row_filtered[x].value_counts(dropna=True).index.tolist()[1] for x in thyroid_cancer_numeric]

##################################

# Gathering the count of first mode values for each numeric column

##################################

numeric_first_mode_count_list = [thyroid_cancer_numeric[x].isin([thyroid_cancer_row_filtered[x].value_counts(dropna=True).index.tolist()[0]]).sum() for x in thyroid_cancer_numeric]

##################################

# Gathering the count of second mode values for each numeric column

##################################

numeric_second_mode_count_list = [thyroid_cancer_numeric[x].isin([thyroid_cancer_row_filtered[x].value_counts(dropna=True).index.tolist()[1]]).sum() for x in thyroid_cancer_numeric]

##################################

# Gathering the first mode to second mode ratio for each numeric column

##################################

numeric_first_second_mode_ratio_list = map(truediv, numeric_first_mode_count_list, numeric_second_mode_count_list)

##################################

# Gathering the count of unique values for each numeric column

##################################

numeric_unique_count_list = thyroid_cancer_numeric.nunique(dropna=True)

##################################

# Gathering the number of observations for each numeric column

##################################

numeric_row_count_list = list([len(thyroid_cancer_numeric)] * len(thyroid_cancer_numeric.columns))

##################################

# Gathering the unique to count ratio for each numeric column

##################################

numeric_unique_count_ratio_list = map(truediv, numeric_unique_count_list, numeric_row_count_list)

##################################

# Gathering the skewness value for each numeric column

##################################

numeric_skewness_list = thyroid_cancer_numeric.skew()

##################################

# Gathering the kurtosis value for each numeric column

##################################

numeric_kurtosis_list = thyroid_cancer_numeric.kurtosis()

##################################

# Generating a column quality summary for the numeric column

##################################

numeric_column_quality_summary = pd.DataFrame(zip(numeric_variable_name_list,

numeric_minimum_list,

numeric_mean_list,

numeric_median_list,

numeric_maximum_list,

numeric_first_mode_list,

numeric_second_mode_list,

numeric_first_mode_count_list,

numeric_second_mode_count_list,

numeric_first_second_mode_ratio_list,

numeric_unique_count_list,

numeric_row_count_list,

numeric_unique_count_ratio_list,

numeric_skewness_list,

numeric_kurtosis_list),

columns=['Numeric.Column.Name',

'Minimum',

'Mean',

'Median',

'Maximum',

'First.Mode',

'Second.Mode',

'First.Mode.Count',

'Second.Mode.Count',

'First.Second.Mode.Ratio',

'Unique.Count',

'Row.Count',

'Unique.Count.Ratio',

'Skewness',

'Kurtosis'])

display(numeric_column_quality_summary)

| Numeric.Column.Name | Minimum | Mean | Median | Maximum | First.Mode | Second.Mode | First.Mode.Count | Second.Mode.Count | First.Second.Mode.Ratio | Unique.Count | Row.Count | Unique.Count.Ratio | Skewness | Kurtosis | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | Age | 15 | 41.25 | 38.0 | 82 | 31 | 27 | 21 | 13 | 1.615385 | 65 | 364 | 0.178571 | 0.678269 | -0.359255 |

##################################

# Counting the number of numeric columns

# with First.Second.Mode.Ratio > 5.00

##################################

len(numeric_column_quality_summary[(numeric_column_quality_summary['First.Second.Mode.Ratio']>5)])

0

##################################

# Counting the number of numeric columns

# with Unique.Count.Ratio > 10.00

##################################

len(numeric_column_quality_summary[(numeric_column_quality_summary['Unique.Count.Ratio']>10)])

0

##################################

# Counting the number of numeric columns

# with Skewness > 3.00 or Skewness < -3.00

##################################

len(numeric_column_quality_summary[(numeric_column_quality_summary['Skewness']>3) | (numeric_column_quality_summary['Skewness']<(-3))])

0

##################################

# Formulating the dataset

# with categorical columns only

##################################

thyroid_cancer_categorical = thyroid_cancer_row_filtered.select_dtypes(include='category')

##################################

# Gathering the variable names for the categorical column

##################################

categorical_variable_name_list = thyroid_cancer_categorical.columns

##################################

# Gathering the first mode values for each categorical column

##################################

categorical_first_mode_list = [thyroid_cancer_row_filtered[x].value_counts().index.tolist()[0] for x in thyroid_cancer_categorical]

##################################

# Gathering the second mode values for each categorical column

##################################

categorical_second_mode_list = [thyroid_cancer_row_filtered[x].value_counts().index.tolist()[1] for x in thyroid_cancer_categorical]

##################################

# Gathering the count of first mode values for each categorical column

##################################

categorical_first_mode_count_list = [thyroid_cancer_categorical[x].isin([thyroid_cancer_row_filtered[x].value_counts(dropna=True).index.tolist()[0]]).sum() for x in thyroid_cancer_categorical]

##################################

# Gathering the count of second mode values for each categorical column

##################################

categorical_second_mode_count_list = [thyroid_cancer_categorical[x].isin([thyroid_cancer_row_filtered[x].value_counts(dropna=True).index.tolist()[1]]).sum() for x in thyroid_cancer_categorical]

##################################

# Gathering the first mode to second mode ratio for each categorical column

##################################

categorical_first_second_mode_ratio_list = map(truediv, categorical_first_mode_count_list, categorical_second_mode_count_list)

##################################

# Gathering the count of unique values for each categorical column

##################################

categorical_unique_count_list = thyroid_cancer_categorical.nunique(dropna=True)

##################################

# Gathering the number of observations for each categorical column

##################################

categorical_row_count_list = list([len(thyroid_cancer_categorical)] * len(thyroid_cancer_categorical.columns))

##################################

# Gathering the unique to count ratio for each categorical column

##################################

categorical_unique_count_ratio_list = map(truediv, categorical_unique_count_list, categorical_row_count_list)

##################################

# Generating a column quality summary for the categorical columns

##################################

categorical_column_quality_summary = pd.DataFrame(zip(categorical_variable_name_list,

categorical_first_mode_list,

categorical_second_mode_list,

categorical_first_mode_count_list,

categorical_second_mode_count_list,

categorical_first_second_mode_ratio_list,

categorical_unique_count_list,

categorical_row_count_list,

categorical_unique_count_ratio_list),

columns=['Categorical.Column.Name',

'First.Mode',

'Second.Mode',

'First.Mode.Count',

'Second.Mode.Count',

'First.Second.Mode.Ratio',

'Unique.Count',

'Row.Count',

'Unique.Count.Ratio'])

display(categorical_column_quality_summary)

| Categorical.Column.Name | First.Mode | Second.Mode | First.Mode.Count | Second.Mode.Count | First.Second.Mode.Ratio | Unique.Count | Row.Count | Unique.Count.Ratio | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | Gender | F | M | 293 | 71 | 4.126761 | 2 | 364 | 0.005495 |

| 1 | Smoking | No | Yes | 315 | 49 | 6.428571 | 2 | 364 | 0.005495 |

| 2 | Hx_Smoking | No | Yes | 336 | 28 | 12.000000 | 2 | 364 | 0.005495 |

| 3 | Hx_Radiotherapy | No | Yes | 357 | 7 | 51.000000 | 2 | 364 | 0.005495 |

| 4 | Thyroid_Function | Euthyroid | Clinical Hyperthyroidism | 313 | 20 | 15.650000 | 5 | 364 | 0.013736 |

| 5 | Physical_Examination | Multinodular goiter | Single nodular goiter-right | 135 | 127 | 1.062992 | 5 | 364 | 0.013736 |

| 6 | Adenopathy | No | Right | 258 | 48 | 5.375000 | 6 | 364 | 0.016484 |

| 7 | Pathology | Papillary | Micropapillary | 271 | 45 | 6.022222 | 4 | 364 | 0.010989 |

| 8 | Focality | Uni-Focal | Multi-Focal | 228 | 136 | 1.676471 | 2 | 364 | 0.005495 |

| 9 | Risk | Low | Intermediate | 230 | 102 | 2.254902 | 3 | 364 | 0.008242 |

| 10 | T | T2 | T3a | 138 | 96 | 1.437500 | 7 | 364 | 0.019231 |

| 11 | N | N0 | N1b | 249 | 93 | 2.677419 | 3 | 364 | 0.008242 |

| 12 | M | M0 | M1 | 346 | 18 | 19.222222 | 2 | 364 | 0.005495 |

| 13 | Stage | I | II | 314 | 32 | 9.812500 | 5 | 364 | 0.013736 |

| 14 | Response | Excellent | Structural Incomplete | 189 | 91 | 2.076923 | 4 | 364 | 0.010989 |

| 15 | Recurred | No | Yes | 256 | 108 | 2.370370 | 2 | 364 | 0.005495 |

##################################

# Counting the number of categorical columns

# with First.Second.Mode.Ratio > 5.00

##################################

len(categorical_column_quality_summary[(categorical_column_quality_summary['First.Second.Mode.Ratio']>5)])

8

##################################

# Identifying the categorical columns

# with First.Second.Mode.Ratio > 5.00

##################################

display(categorical_column_quality_summary[(categorical_column_quality_summary['First.Second.Mode.Ratio']>5)].sort_values(by=['First.Second.Mode.Ratio'], ascending=False))

| Categorical.Column.Name | First.Mode | Second.Mode | First.Mode.Count | Second.Mode.Count | First.Second.Mode.Ratio | Unique.Count | Row.Count | Unique.Count.Ratio | |

|---|---|---|---|---|---|---|---|---|---|

| 3 | Hx_Radiotherapy | No | Yes | 357 | 7 | 51.000000 | 2 | 364 | 0.005495 |

| 12 | M | M0 | M1 | 346 | 18 | 19.222222 | 2 | 364 | 0.005495 |

| 4 | Thyroid_Function | Euthyroid | Clinical Hyperthyroidism | 313 | 20 | 15.650000 | 5 | 364 | 0.013736 |

| 2 | Hx_Smoking | No | Yes | 336 | 28 | 12.000000 | 2 | 364 | 0.005495 |

| 13 | Stage | I | II | 314 | 32 | 9.812500 | 5 | 364 | 0.013736 |

| 1 | Smoking | No | Yes | 315 | 49 | 6.428571 | 2 | 364 | 0.005495 |

| 7 | Pathology | Papillary | Micropapillary | 271 | 45 | 6.022222 | 4 | 364 | 0.010989 |

| 6 | Adenopathy | No | Right | 258 | 48 | 5.375000 | 6 | 364 | 0.016484 |

##################################

# Counting the number of categorical columns

# with Unique.Count.Ratio > 10.00

##################################

len(categorical_column_quality_summary[(categorical_column_quality_summary['Unique.Count.Ratio']>10)])

0

1.4. Data Preprocessing ¶

1.4.1 Ordinal Binning ¶

- The variable Age was applied with ordinal binning to transform from a numeric to a binary categorical predictor named Age_Group:

- Age_Group:

- 258 Age_Group=<50: 70.87%

- 106 Age_Group=50+: 29.12%

- Age_Group:

- Certain unnecessary columns were excluded as follows:

- Predictor variable Age was replaced with Age_Group

- Response variable Recurred will not be used in the context of the analysis

- Certain predictor columns were similarly excluded as noted with extremely low variance containing categories with very few or almost no variations across observations:

- Hx_Smoking

- Hx_Radiotherapy

- M

##################################

# Creating a dataset copy

# of the row filtered data

##################################

thyroid_cancer_baseline = thyroid_cancer_row_filtered.copy()

##################################

# Defining bins and labels

##################################

bins = [0, 50, float('inf')]

labels = ['<50', '50+']

##################################

# Creating ordinal bins

# for the numeric column

##################################

thyroid_cancer_baseline['Age_Group'] = pd.cut(thyroid_cancer_baseline['Age'], bins=bins, labels=labels, right=False)

thyroid_cancer_baseline['Age_Group'] = pd.Categorical(thyroid_cancer_baseline['Age_Group'], categories=labels, ordered=True)

display(thyroid_cancer_baseline)

| Age | Gender | Smoking | Hx_Smoking | Hx_Radiotherapy | Thyroid_Function | Physical_Examination | Adenopathy | Pathology | Focality | Risk | T | N | M | Stage | Response | Recurred | Age_Group | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 27 | F | No | No | No | Euthyroid | Single nodular goiter-left | No | Micropapillary | Uni-Focal | Low | T1a | N0 | M0 | I | Indeterminate | No | <50 |

| 1 | 34 | F | No | Yes | No | Euthyroid | Multinodular goiter | No | Micropapillary | Uni-Focal | Low | T1a | N0 | M0 | I | Excellent | No | <50 |

| 2 | 30 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Micropapillary | Uni-Focal | Low | T1a | N0 | M0 | I | Excellent | No | <50 |

| 3 | 62 | F | No | No | No | Euthyroid | Single nodular goiter-right | No | Micropapillary | Uni-Focal | Low | T1a | N0 | M0 | I | Excellent | No | 50+ |

| 4 | 62 | F | No | No | No | Euthyroid | Multinodular goiter | No | Micropapillary | Multi-Focal | Low | T1a | N0 | M0 | I | Excellent | No | 50+ |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 378 | 72 | M | Yes | Yes | Yes | Euthyroid | Single nodular goiter-right | Right | Papillary | Uni-Focal | High | T4b | N1b | M1 | IVB | Biochemical Incomplete | Yes | 50+ |

| 379 | 81 | M | Yes | No | Yes | Euthyroid | Multinodular goiter | Extensive | Papillary | Multi-Focal | High | T4b | N1b | M1 | IVB | Structural Incomplete | Yes | 50+ |

| 380 | 72 | M | Yes | Yes | No | Euthyroid | Multinodular goiter | Bilateral | Papillary | Multi-Focal | High | T4b | N1b | M1 | IVB | Structural Incomplete | Yes | 50+ |

| 381 | 61 | M | Yes | Yes | Yes | Clinical Hyperthyroidism | Multinodular goiter | Extensive | Hurthle Cell | Multi-Focal | High | T4b | N1b | M0 | IVA | Structural Incomplete | Yes | 50+ |

| 382 | 67 | M | Yes | No | No | Euthyroid | Multinodular goiter | Bilateral | Papillary | Multi-Focal | High | T4b | N1b | M0 | IVA | Structural Incomplete | Yes | 50+ |

364 rows × 18 columns

##################################

# Performing a general exploration of the categorical variable levels

# of the ordinally binned predictor

##################################

print("Column: Age_Group")

print("Absolute Frequencies:")

print(thyroid_cancer_baseline['Age_Group'].value_counts().reindex(thyroid_cancer_baseline['Age_Group'].cat.categories))

print("\nNormalized Frequencies:")

print(thyroid_cancer_baseline['Age_Group'].value_counts(normalize=True).reindex(thyroid_cancer_baseline['Age_Group'].cat.categories))

Column: Age_Group Absolute Frequencies: <50 258 50+ 106 Name: count, dtype: int64 Normalized Frequencies: <50 0.708791 50+ 0.291209 Name: proportion, dtype: float64

##################################

# Preparing the working dataset

# by excluding columns that are

# irrelevant and had data quality issues

##################################

exclude_cols_irrelevant_dataquality = ['Age', 'Recurred', 'Hx_Smoking', 'Hx_Radiotherapy', 'M']

thyroid_cancer_baseline_filtered = thyroid_cancer_baseline.drop(columns=exclude_cols_irrelevant_dataquality)

display(thyroid_cancer_baseline_filtered)

| Gender | Smoking | Thyroid_Function | Physical_Examination | Adenopathy | Pathology | Focality | Risk | T | N | Stage | Response | Age_Group | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | F | No | Euthyroid | Single nodular goiter-left | No | Micropapillary | Uni-Focal | Low | T1a | N0 | I | Indeterminate | <50 |

| 1 | F | No | Euthyroid | Multinodular goiter | No | Micropapillary | Uni-Focal | Low | T1a | N0 | I | Excellent | <50 |

| 2 | F | No | Euthyroid | Single nodular goiter-right | No | Micropapillary | Uni-Focal | Low | T1a | N0 | I | Excellent | <50 |

| 3 | F | No | Euthyroid | Single nodular goiter-right | No | Micropapillary | Uni-Focal | Low | T1a | N0 | I | Excellent | 50+ |

| 4 | F | No | Euthyroid | Multinodular goiter | No | Micropapillary | Multi-Focal | Low | T1a | N0 | I | Excellent | 50+ |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 378 | M | Yes | Euthyroid | Single nodular goiter-right | Right | Papillary | Uni-Focal | High | T4b | N1b | IVB | Biochemical Incomplete | 50+ |

| 379 | M | Yes | Euthyroid | Multinodular goiter | Extensive | Papillary | Multi-Focal | High | T4b | N1b | IVB | Structural Incomplete | 50+ |

| 380 | M | Yes | Euthyroid | Multinodular goiter | Bilateral | Papillary | Multi-Focal | High | T4b | N1b | IVB | Structural Incomplete | 50+ |

| 381 | M | Yes | Clinical Hyperthyroidism | Multinodular goiter | Extensive | Hurthle Cell | Multi-Focal | High | T4b | N1b | IVA | Structural Incomplete | 50+ |

| 382 | M | Yes | Euthyroid | Multinodular goiter | Bilateral | Papillary | Multi-Focal | High | T4b | N1b | IVA | Structural Incomplete | 50+ |

364 rows × 13 columns

1.4.2 Category Aggregration and Encoding ¶

- 9 categorical predictors were observed with categories consisting of too few cases exhibiting high cardinality:

- Thyroid_Function:

- 313 Thyroid_Function=Euthyroid: 85.98%

- 14 Thyroid_Function=Subclinical Hypothyroidism: 3.86%

- 5 Thyroid_Function=Subclinical Hyperthyroidism: 1.37%

- 12 Thyroid_Function=Clinical Hypothyroidism: 3.29%

- 20 Thyroid_Function=Clinical Hyperthyroidism: 5.49%

- Physical_Examination:

- 7 Physical_Examination=Normal: 1.92%

- 88 Physical_Examination=Single nodular goiter-left: 24.17%

- 127 Physical_Examination=Single nodular goiter-right: 34.89%

- 135 Physical_Examination=Multinodular goiter: 37.09%

- 7 Physical_Examination=Diffuse goiter: 1.92%

- Adenopathy:

- 258 Adenopathy=No: 70.87%

- 17 Adenopathy=Left: 4.67%

- 48 Adenopathy=Right: 13.19%

- 32 Adenopathy=Bilateral: 8.79%

- 2 Adenopathy=Posterior: 5.49%

- 7 Adenopathy=Extensive: 1.92%

- Pathology:

- 20 Pathology=Hurthle Cell: 5.49%

- 28 Pathology=Follicular: 7.69%

- 45 Pathology=Micropapillary: 12.36%

- 271 Pathology=Papillary: 74.45%

- Risk:

- 230 Risk=Low: 63.18%

- 102 Risk=Intermediate: 28.02%

- 32 Risk=High: 8.79%

- T:

- 46 T=T1a: 12.63%

- 40 T=T1b: 10.98%

- 138 T=T2: 37.91%

- 96 T=T3a: 26.37%

- 16 T=T3b: 4.39%

- 20 T=T4a: 5.49%

- 8 T=T4b: 2.19%

- N:

- 249 N=N0: 68.41%

- 22 N=N1a: 6.04%

- 93 N=N1b: 25.54%

- Stage:

- 314 Stage=I: 86.26%

- 32 Stage=II: 8.79%

- 4 Stage=III: 1.09%

- 3 Stage=IVA: 0.82%

- 11 Stage=IVB: 3.02%

- Response:

- 189 Response=Excellent: 51.92%

- 91 Response=Structural Incomplete: 25.00%

- 23 Response=Biochemical Incomplete: 6.31%

- 61 Response=Indeterminate: 16.75%

- Thyroid_Function:

- Category aggregation was applied to certain categorical predictors observed with many levels containing only a few observations to improve data cardinality:

- Thyroid_Function:

- 313 Thyroid_Function=Euthyroid: 85.98%

- 51 Thyroid_Function=Hypothyroidism or Hyperthyroidism: 14.01%

- Physical_Examination:

- 142 Physical_Examination=Normal or Single Nodular Goiter : 39.01%

- 222 Physical_Examination=Multinodular or Diffuse Goiter: 60.98%

- Adenopathy:

- 258 Adenopathy=No: 70.87%

- 106 Adenopathy=Yes: 29.12%

- Pathology:

- 48 Pathology=Non-Papillary : 13.18%

- 316 Pathology=Papillary: 86.81%

- Risk:

- 134 Risk=Low: 36.81%

- 230 Risk=Intermediate to High: 63.18%

- T:

- 224 T=T1 to T2: 61.53%

- 140 T=T3 to T4b: 38.46%

- N:

- 249 N=N0: 68.41%

- 115 N=N1: 31.59%

- Stage:

- 314 Stage=I: 86.26%

- 50 Stage=II to IVB: 13.73%

- Response:

- 189 Response=Excellent: 51.92%

- 175 Response=Indeterminate or Incomplete: 48.07%

- Thyroid_Function:

- To focus on potential outliers from factors specifically pertaining to the clinicopathological characteristics of patients, only 6 categorical predictors were chosen to be contextually valid for the upstream analysis:

- Gender:

- 313 Gender=M: 19.50%

- 51 Gender=F: 80.49%

- Thyroid_Function:

- 313 Thyroid_Function=Euthyroid: 85.98%

- 51 Thyroid_Function=Hypothyroidism or Hyperthyroidism: 14.01%

- Physical_Examination:

- 142 Physical_Examination=Normal or Single Nodular Goiter : 39.01%

- 222 Physical_Examination=Multinodular or Diffuse Goiter: 60.98%

- Adenopathy:

- 258 Adenopathy=No: 70.87%

- 106 Adenopathy=Yes: 29.12%

- Pathology:

- 48 Pathology=Non-Papillary : 13.18%

- 316 Pathology=Papillary: 86.81%

- Age_Group:

- 313 Age_Group=<50: 70.88%

- 51 Age_Group=50+: 29.12%

- Gender:

##################################

# Performing a general exploration of the categorical variable levels

# based on the ordered categories

# before category aggregation

##################################

ordered_cat_cols = thyroid_cancer_baseline_filtered.select_dtypes(include=["category"]).columns

for col in ordered_cat_cols:

print(f"Column: {col}")

print("Absolute Frequencies:")

print(thyroid_cancer_baseline_filtered[col].value_counts().reindex(thyroid_cancer_baseline_filtered[col].cat.categories))

print("\nNormalized Frequencies:")

print(thyroid_cancer_baseline_filtered[col].value_counts(normalize=True).reindex(thyroid_cancer_baseline_filtered[col].cat.categories))

print("-" * 50)

Column: Gender Absolute Frequencies: M 71 F 293 Name: count, dtype: int64 Normalized Frequencies: M 0.195055 F 0.804945 Name: proportion, dtype: float64 -------------------------------------------------- Column: Smoking Absolute Frequencies: No 315 Yes 49 Name: count, dtype: int64 Normalized Frequencies: No 0.865385 Yes 0.134615 Name: proportion, dtype: float64 -------------------------------------------------- Column: Thyroid_Function Absolute Frequencies: Euthyroid 313 Subclinical Hypothyroidism 14 Subclinical Hyperthyroidism 5 Clinical Hypothyroidism 12 Clinical Hyperthyroidism 20 Name: count, dtype: int64 Normalized Frequencies: Euthyroid 0.859890 Subclinical Hypothyroidism 0.038462 Subclinical Hyperthyroidism 0.013736 Clinical Hypothyroidism 0.032967 Clinical Hyperthyroidism 0.054945 Name: proportion, dtype: float64 -------------------------------------------------- Column: Physical_Examination Absolute Frequencies: Normal 7 Single nodular goiter-left 88 Single nodular goiter-right 127 Multinodular goiter 135 Diffuse goiter 7 Name: count, dtype: int64 Normalized Frequencies: Normal 0.019231 Single nodular goiter-left 0.241758 Single nodular goiter-right 0.348901 Multinodular goiter 0.370879 Diffuse goiter 0.019231 Name: proportion, dtype: float64 -------------------------------------------------- Column: Adenopathy Absolute Frequencies: No 258 Left 17 Right 48 Bilateral 32 Posterior 2 Extensive 7 Name: count, dtype: int64 Normalized Frequencies: No 0.708791 Left 0.046703 Right 0.131868 Bilateral 0.087912 Posterior 0.005495 Extensive 0.019231 Name: proportion, dtype: float64 -------------------------------------------------- Column: Pathology Absolute Frequencies: Hurthle Cell 20 Follicular 28 Micropapillary 45 Papillary 271 Name: count, dtype: int64 Normalized Frequencies: Hurthle Cell 0.054945 Follicular 0.076923 Micropapillary 0.123626 Papillary 0.744505 Name: proportion, dtype: float64 -------------------------------------------------- Column: Focality Absolute Frequencies: Uni-Focal 228 Multi-Focal 136 Name: count, dtype: int64 Normalized Frequencies: Uni-Focal 0.626374 Multi-Focal 0.373626 Name: proportion, dtype: float64 -------------------------------------------------- Column: Risk Absolute Frequencies: Low 230 Intermediate 102 High 32 Name: count, dtype: int64 Normalized Frequencies: Low 0.631868 Intermediate 0.280220 High 0.087912 Name: proportion, dtype: float64 -------------------------------------------------- Column: T Absolute Frequencies: T1a 46 T1b 40 T2 138 T3a 96 T3b 16 T4a 20 T4b 8 Name: count, dtype: int64 Normalized Frequencies: T1a 0.126374 T1b 0.109890 T2 0.379121 T3a 0.263736 T3b 0.043956 T4a 0.054945 T4b 0.021978 Name: proportion, dtype: float64 -------------------------------------------------- Column: N Absolute Frequencies: N0 249 N1a 22 N1b 93 Name: count, dtype: int64 Normalized Frequencies: N0 0.684066 N1a 0.060440 N1b 0.255495 Name: proportion, dtype: float64 -------------------------------------------------- Column: Stage Absolute Frequencies: I 314 II 32 III 4 IVA 3 IVB 11 Name: count, dtype: int64 Normalized Frequencies: I 0.862637 II 0.087912 III 0.010989 IVA 0.008242 IVB 0.030220 Name: proportion, dtype: float64 -------------------------------------------------- Column: Response Absolute Frequencies: Excellent 189 Structural Incomplete 91 Biochemical Incomplete 23 Indeterminate 61 Name: count, dtype: int64 Normalized Frequencies: Excellent 0.519231 Structural Incomplete 0.250000 Biochemical Incomplete 0.063187 Indeterminate 0.167582 Name: proportion, dtype: float64 -------------------------------------------------- Column: Age_Group Absolute Frequencies: <50 258 50+ 106 Name: count, dtype: int64 Normalized Frequencies: <50 0.708791 50+ 0.291209 Name: proportion, dtype: float64 --------------------------------------------------

##################################

# Merging small categories into broader groups

# for certain categorical predictors

# to ensure sufficient representation in statistical models

# and prevent sparsity issues in cross-validation

##################################

thyroid_cancer_baseline_filtered['Thyroid_Function'] = thyroid_cancer_baseline_filtered['Thyroid_Function'].map(lambda x: 'Euthyroid' if (x in ['Euthyroid']) else 'Hypothyroidism or Hyperthyroidism').astype('category')

thyroid_cancer_baseline_filtered['Physical_Examination'] = thyroid_cancer_baseline_filtered['Physical_Examination'].map(lambda x: 'Normal or Single Nodular Goiter' if (x in ['Normal', 'Single nodular goiter-left', 'Single nodular goiter-right']) else 'Multinodular or Diffuse Goiter').astype('category')