Model Deployment : Detecting and Analyzing Machine Learning Model Drift Using Open-Source Monitoring Tools¶

- 1. Table of Contents

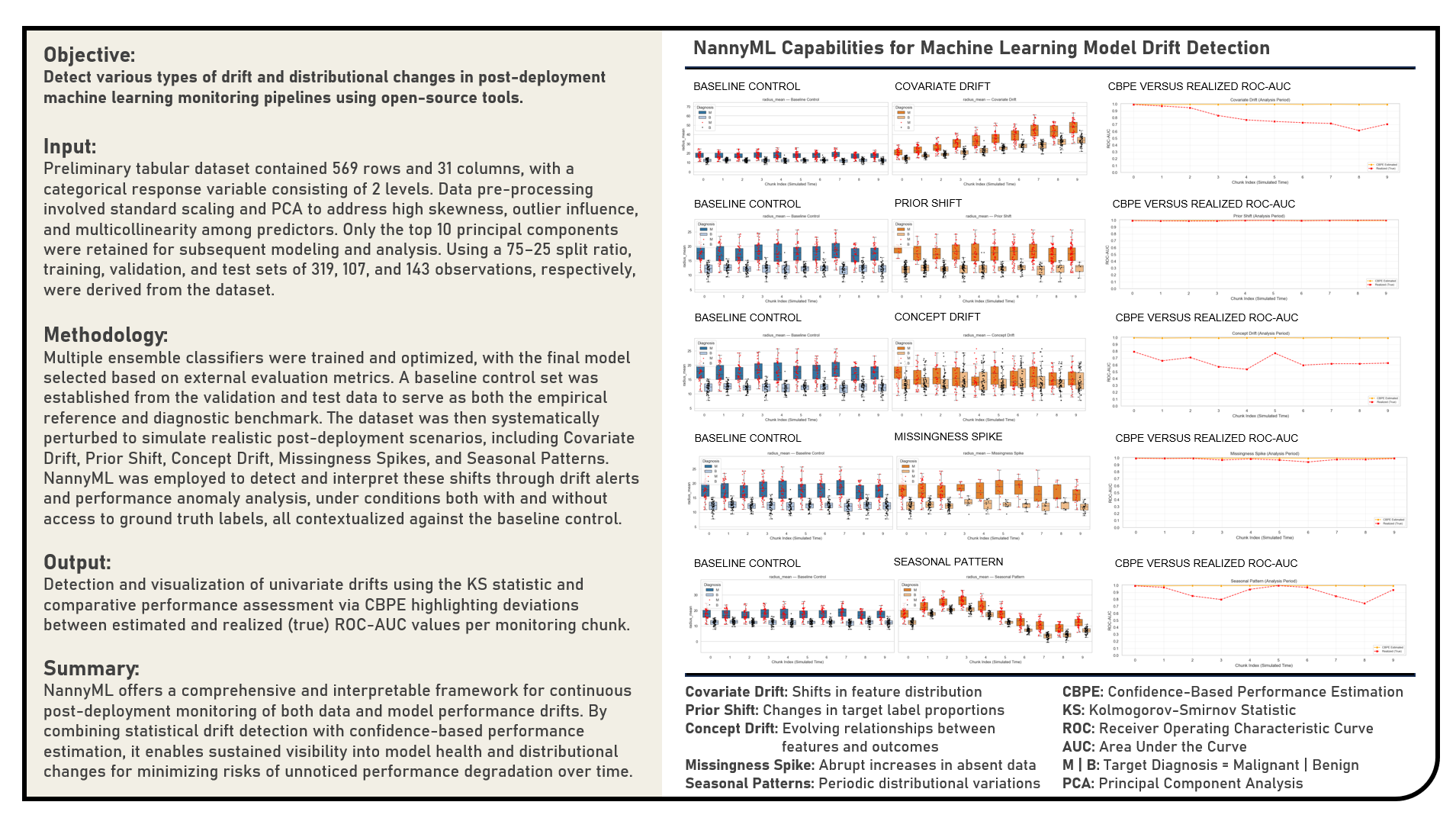

- 2. Summary

- 3. References

1. Table of Contents ¶

This project investigates open-source frameworks for post-deployment model monitoring and performance estimation, with a particular focus on NannyML in detecting and interpreting shifts in machine learning pipelines using Python. The objective was to systematically analyze how different types of drift and distributional changes manifest after model deployment, and to demonstrate how robust monitoring mitigates risks of performance degradation and biased decision-making. The workflow began with the development and selection of a baseline predictive model, which serves as a reference for stability. The dataset was then deliberately perturbed to simulate a range of realistic post-deployment scenarios: Covariate Drift (shifts in feature distributions), Prior Shift (changes in target label proportions), Concept Drift (evolving relationships between features and outcomes), Missingness Spikes (abrupt increases in absent data), and Seasonal Patterns (periodic variations in distributions). NannyML’s Kolmogorov–Smirnov (KS) Statistic and Confidence-Based Performance Estimation (CBPE) Method were subsequently applied to diagnose these shifts, evaluate their potential impact, and provide interpretable insights into model reliability. By contrasting baseline and perturbed conditions, the experiment demonstrated how continuous monitoring augments traditional offline evaluation, offering a safeguard against hidden risks. The findings highlighted how tools like NannyML can integrate seamlessly into MLOps workflows to enable proactive governance, early warning systems, and sustainable deployment practices. All results were consolidated in a Summary presented at the end of the document.

Post-Deployment Monitoring refers to the continuous oversight of machine learning models once they are integrated into production systems. Unlike offline evaluation, which relies on static validation datasets, monitoring addresses the challenges of evolving real-world data streams where underlying distributions may shift. Effective monitoring ensures that models remain accurate, unbiased, and aligned with business objectives. In MLOps, monitoring encompasses data integrity checks, drift detection, performance estimation, and alerting mechanisms. NannyML operationalizes this concept by focusing on performance estimation without ground truth, and by offering statistical methods to detect when data or predictions deviate from expected baselines. The challenges of post-deployment monitoring include delayed or missing ground truth labels, non-stationary data, hidden feedback loops, and difficulties distinguishing natural fluctuations from problematic drifts. Common solutions involve deploying drift detection algorithms, conducting regular audits of data pipelines, simulating counterfactuals, and retraining models on updated data. Monitoring frameworks must balance sensitivity (detecting real problems quickly) with robustness (avoiding false alarms caused by natural noise). Another key challenge is explainability: stakeholders need interpretable signals that justify interventions such as retraining or rolling back models. Tools like NannyML address these challenges through statistical tests for data drift, performance estimation without labels, missingness tracking, and visual diagnostics, making monitoring actionable for data scientists and business teams alike.

Baseline Control represents the stable reference state of a machine learning system against which all post-deployment data and model behavior are compared. It is typically generated using a clean, representative sample of pre-deployment data or early production data collected under known, reliable conditions. This dataset serves as the foundation for defining expected feature distributions, class priors, and performance benchmarks. In post-deployment monitoring, the Baseline Control is essential for distinguishing normal variability from problematic drift or degradation. Metrics such as feature stability, label proportions, and estimated performance consistency characterize its reliability. NannyML operationalizes Baseline Control by allowing users to designate a reference period, fit estimators such as CBPE (Confidence-Based Performance Estimation) on that data, and compute statistical boundaries or confidence intervals. Deviations in subsequent analysis periods, whether in feature distributions, prediction probabilities, or estimated performance, are then detected relative to this baseline. The Baseline Control thus functions as both an empirical anchor and a diagnostic standard, ensuring that drift alerts and performance anomalies are meaningfully contextualized against the model’s original operating state.

Covariate Drift occurs when the distribution of input features changes over time compared to the data used to train the model. Also known as data drift, it does not necessarily imply that the model’s predictive mapping is invalid, but it often precedes performance degradation. Detecting covariate drift requires comparing feature distributions between baseline (reference) data and incoming production data. NannyML provides multiple statistical tests and visualization tools to flag significant changes. Key signatures of covariate drift include shifts in summary statistics, changes in distributional shape, or increased divergence between reference and production feature distributions. These shifts may lead to poor generalization, as the model has not been exposed to the altered feature ranges. Detection techniques include univariate statistical tests (Kolmogorov–Smirnov, Chi-square), multivariate distance measures (Jensen–Shannon divergence, Population Stability Index), and density estimation methods. Remediation approaches involve domain adaptation, re-weighting training samples, or retraining models on updated data distributions. NannyML implements univariate and multivariate tests, provides drift magnitude quantification, and visualizes feature-level changes, allowing practitioners to pinpoint which features are most responsible for the detected drift.

Prior Shift arises when the distribution of the target variable changes, while the conditional relationship between features and labels remains stable. This is also referred to as label shift. Models trained on the original distribution may underperform because their predictions no longer match the new class priors. Detecting prior shifts is crucial, especially in imbalanced classification tasks where small changes in priors can lead to large performance impacts. Prior shift is typically characterized by systematic increases or decreases in class frequencies without corresponding changes in feature distributions. Its impact includes skewed decision thresholds, inflated false positives or false negatives, and degraded calibration of predicted probabilities. Detection approaches include monitoring predicted class proportions, estimating priors using EM-based algorithms, and re-weighting predictions to align with new distributions. Correction strategies may involve resampling, threshold adjustment, or cost-sensitive learning. NannyML assists by tracking predicted probability distributions and comparing them against reference priors, using techniques such as Jensen–Shannon divergence and Population Stability Index to quantify the magnitude of shift.

Concept Drift occurs when the underlying relationship between input features and target labels evolves over time. Unlike covariate drift, where features change independently, concept drift implies that the model’s mapping function itself becomes outdated. Concept drift is among the most damaging forms of drift because it directly undermines predictive accuracy. Detecting it often requires monitoring model outputs or inferred performance over time. NannyML addresses this by estimating performance even when ground truth labels are unavailable. Concept drift is typically signaled by a gradual or sudden decline in performance metrics, inconsistent error patterns, or misalignment between expected and actual prediction behavior. Its impact is severe: models may lose predictive power entirely if they cannot adapt. Detection methods include window-based performance monitoring, hypothesis testing, adaptive ensembles, and statistical monitoring of residuals. Corrective actions include periodic retraining, incremental learning, and online adaptation strategies. NannyML leverages Confidence-Based Performance Estimation (CBPE) and other statistical techniques to estimate performance degradation without labels, making it possible to detect concept drift in real-time production environments.

Missingness Spike refers to sudden increases in missing values within production data. Missing features can destabilize preprocessing pipelines, distort predictions, and signal upstream data collection failures. Monitoring missingness is critical for ensuring both model reliability and data pipeline health. NannyML provides built-in mechanisms to track and visualize changes in missing data patterns, alerting stakeholders before downstream impacts occur. Key indicators of missingness spikes include abrupt rises in null counts, missing categorical levels, or structural breaks in feature completeness. The consequences range from biased predictions to outright system failures if preprocessing pipelines cannot handle unexpected missingness. Detection methods include statistical monitoring of missing value proportions, anomaly detection on completeness metrics, and threshold-based alerts. Solutions typically involve robust imputation, pipeline hardening, and upstream data validation. NannyML offers automated missingness detection, completeness trend visualization, and configurable thresholds, ensuring that missingness issues are surfaced early.

Seasonal Pattern Shift represents periodic fluctuations in data distributions or outcomes that follow predictable cycles. If models are not trained with sufficient historical data to capture these patterns, their predictions may systematically underperform during certain periods. NannyML’s monitoring can reveal recurring deviations, helping teams distinguish between natural seasonality and genuine drift that requires retraining. Seasonality is often characterized by cyclic patterns in data features, prediction distributions, or performance metrics. Its impact includes systematic biases, recurring error peaks, and difficulty distinguishing drift from natural variability. Detection techniques include autocorrelation analysis, Fourier decomposition, and seasonal-trend decomposition. Mitigation strategies involve training with longer historical datasets, adding time-related features, or developing seasonally adaptive models. NannyML highlights recurring deviations in drift metrics, making it easier for practitioners to separate cyclical behavior from true degradation, ensuring that alerts are contextually relevant.

Performance Estimation Without Labels refers to scenarios in real-world deployments where the ground truth often arrives with delays or or may never be available. This makes direct performance tracking difficult. NannyML addresses this challenge by providing algorithms to estimate model performance without labels using confidence distributions, statistical inference, and robust estimation techniques. This capability allows practitioners to maintain visibility into model health continuously, even in label-scarce settings, bridging a critical gap in MLOps monitoring practices. Algorithms in this domain include Confidence-Based Performance Estimation (CBPE), which infers performance by comparing predicted probability distributions against expected confidence intervals, and Direct Loss Estimation, which approximates error rates based on calibration. Statistical inference techniques allow practitioners to construct confidence bounds around estimated metrics, while robust estimation mitigates the risk of spurious signals caused by small sample sizes or noisy predictions. NannyML provides implementations of CBPE and DLE, supporting metrics such as precision, recall, F1-score, and AUROC, all estimated without labels. This makes it possible to detect when a model is underperforming even before labels are collected, reducing blind spots in production monitoring.

Performance Estimation With Labels refers to the direct evaluation of model predictions against actual ground truth outcomes once labels are available. Unlike label-free methods, this approach allows for precise calculation of traditional performance metrics such as accuracy, precision, recall, F1-score, AUROC, and calibration error. Monitoring with labels provides the most reliable indication of model performance, enabling fine-grained diagnosis of errors and biases. The advantage of having labels is the ability to attribute errors to specific subgroups, detect fairness violations, and conduct targeted retraining. Challenges include label delay, annotation quality, and ensuring that labels accurately reflect the operational environment. Common approaches include sliding window evaluation, where performance is tracked over recent data batches, and benchmark comparison, where production metrics are compared to baseline test set results. NannyML incorporates labeled performance tracking alongside its label-free estimators, allowing users to validate estimates once ground truth becomes available. This dual capability ensures consistency, improves confidence in label-free methods, and provides a comprehensive framework for performance monitoring in both short-term and long-term horizons.

1.1. Data Background ¶

An open Breast Cancer Dataset from Kaggle (with all credits attributed to Wasiq Ali) was used for the analysis as consolidated from the following primary sources:

- Reference Repository entitled Differentiated breast Cancer Recurrence from UC Irvine Machine Learning Repository

- Research Paper entitled Nuclear Feature Extraction for Breast Tumor Diagnosis from the Electronic Imaging

This study hypothesized that the cell nuclei features derived from digitized images of fine needle aspirates (FNA) of breast masses influence breast cancer diagnoses between patients.

The dichotomous categorical variable for the study is:

- diagnosis - Status of the patient (M, Medical diagnosis of a cancerous breast tumor | B, Medical diagnosis of a non-cancerous breast tumor)

The predictor variables for the study are:

- radius_mean - Mean of the radius measurements (Mean of distances from center to points on the perimeter)

- texture_mean - Mean of the texture measurements (Standard deviation of grayscale values)

- perimeter_mean - Mean of the perimeter measurements

- area_mean - Mean of the area measurements

- smoothness_mean - Mean of the smoothness measurements (Local variation in radius lengths)

- compactness_mean - Mean of the compactness measurements (Perimeter² / area - 1.0)

- concavity_mean - Mean of the concavity measurements (Severity of concave portions of the contour)

- concave points_mean - Mean of the concave points measurements (Number of concave portions of the contour)

- symmetry_mean - Mean of the symmetry measurements

- fractal_dimension_mean - Mean of the fractal dimension measurements (Coastline approximation - 1)

- radius_se - Standard error of the radius measurements (Standard error of distances from center to points on the perimeter)

- texture_se - Standard error of the texture measurements (Standard deviation of grayscale values)

- perimeter_se - Standard error of the perimeter measurements

- area_se - Standard error of the area measurements

- smoothness_se - Standard error of the smoothness measurements (Local variation in radius lengths)

- compactness_se - Standard error of the compactness measurements (Perimeter² / area - 1.0)

- concavity_se - Standard error of the concavity measurements (Severity of concave portions of the contour)

- concave points_se - Standard error of the concave points measurements (Number of concave portions of the contour)

- symmetry_se - Standard error of the symmetry measurements

- fractal_dimension_se - Standard error of the fractal dimension measurements (Coastline approximation - 1)

- radius_worst - Largest value of the radius measurements (Largest value of distances from center to points on the perimeter)

- texture_worst - Largest value of the texture measurements (Standard deviation of grayscale values)

- perimeter_worst - Largest value of the perimeter measurements

- area_worst - Largest value of the area measurements

- smoothness_worst - Largest value of the smoothness measurements (Local variation in radius lengths)

- compactness_worst - Largest value of the compactness measurements (Perimeter² / area - 1.0)

- concavity_worst - Largest value of the concavity measurements (Severity of concave portions of the contour)

- concave points_worst - Largest value of the concave points measurements (Number of concave portions of the contour)

- symmetry_worst - Largest value of the symmetry measurements

- fractal_dimension_worst - Largest value of the fractal dimension measurements (Coastline approximation - 1)

1.2. Data Description ¶

- The initial tabular dataset was comprised of 569 observations and 32 variables (including 1 metadata, 1 target and 30 predictors).

- 569 rows (observations)

- 32 columns (variables)

- 1/32 metadata (categorical)

- id

- 1/32 target (categorical)

- diagnosis

- 30/32 predictor (numeric)

- radius_mean

- texture_mean

- perimeter_mean

- area_mean

- smoothness_mean

- compactness_mean

- concavity_mean

- concave points_mean

- symmetry_mean

- fractal_dimension_mean

- radius_se

- texture_se

- perimeter_se

- area_se

- smoothness_se

- compactness_se

- concavity_se

- concave points_se

- symmetry_se

- fractal_dimension_se

- radius_worst

- texture_worst

- perimeter_worst

- area_worst

- smoothness_worst

- compactness_worst

- concavity_worst

- concave points_worst

- symmetry_worst

- fractal_dimension_worst

- 1/32 metadata (categorical)

- The id variable was transformed to a row index for the data observations.

##################################

# Loading Python Libraries

##################################

import numpy as np

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

import os

import joblib

import re

import pickle

%matplotlib inline

import nannyml as nml

from nannyml.performance_estimation import CBPE

from nannyml.performance_calculation import PerformanceCalculator

from nannyml.chunk import DefaultChunker

import hashlib

import json

from urllib.parse import urlparse

import logging

from operator import truediv

from sklearn.preprocessing import StandardScaler, FunctionTransformer

from sklearn.decomposition import PCA

from scipy import stats

from scipy.stats import pointbiserialr, chi2_contingency

from sklearn.pipeline import Pipeline

from sklearn.compose import ColumnTransformer

from sklearn.tree import DecisionTreeClassifier

from sklearn.ensemble import RandomForestClassifier, AdaBoostClassifier, GradientBoostingClassifier

from sklearn.impute import SimpleImputer

from xgboost import XGBClassifier

from lightgbm import LGBMClassifier

from catboost import CatBoostClassifier

from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score, roc_auc_score, confusion_matrix, classification_report

from sklearn.model_selection import train_test_split, ParameterGrid, StratifiedShuffleSplit, RepeatedStratifiedKFold, GridSearchCV

from sklearn.utils import resample

from sklearn.base import clone

import warnings

warnings.filterwarnings("ignore", message=".*force_all_finite.*")

warnings.filterwarnings("ignore", message="X does not have valid feature names")

##################################

# Defining file paths

##################################

DATASETS_ORIGINAL_PATH = r"datasets\original"

DATASETS_FINAL_PATH = r"datasets\final\complete"

DATASETS_FINAL_TRAIN_PATH = r"datasets\final\train"

DATASETS_FINAL_TRAIN_FEATURES_PATH = r"datasets\final\train\features"

DATASETS_FINAL_TRAIN_TARGET_PATH = r"datasets\final\train\target"

DATASETS_FINAL_VALIDATION_PATH = r"datasets\final\validation"

DATASETS_FINAL_VALIDATION_FEATURES_PATH = r"datasets\final\validation\features"

DATASETS_FINAL_VALIDATION_TARGET_PATH = r"datasets\final\validation\target"

DATASETS_FINAL_TEST_PATH = r"datasets\final\test"

DATASETS_FINAL_TEST_FEATURES_PATH = r"datasets\final\test\features"

DATASETS_FINAL_TEST_TARGET_PATH = r"datasets\final\test\target"

DATASETS_PREPROCESSED_PATH = r"datasets\preprocessed"

DATASETS_PREPROCESSED_TRAIN_PATH = r"datasets\preprocessed\train"

DATASETS_PREPROCESSED_TRAIN_FEATURES_PATH = r"datasets\preprocessed\train\features"

DATASETS_PREPROCESSED_TRAIN_TARGET_PATH = r"datasets\preprocessed\train\target"

DATASETS_PREPROCESSED_VALIDATION_PATH = r"datasets\preprocessed\validation"

DATASETS_PREPROCESSED_VALIDATION_FEATURES_PATH = r"datasets\preprocessed\validation\features"

DATASETS_PREPROCESSED_VALIDATION_TARGET_PATH = r"datasets\preprocessed\validation\target"

DATASETS_PREPROCESSED_TEST_PATH = r"datasets\preprocessed\test"

DATASETS_PREPROCESSED_TEST_FEATURES_PATH = r"datasets\preprocessed\test\features"

DATASETS_PREPROCESSED_TEST_TARGET_PATH = r"datasets\preprocessed\test\target"

MODELS_PATH = r"models"

##################################

# Loading the dataset

# from the DATASETS_ORIGINAL_PATH

##################################

breast_cancer = pd.read_csv(os.path.join("..", DATASETS_ORIGINAL_PATH, "Breast_Cancer_Dataset.csv"))

##################################

# Performing a general exploration of the dataset

##################################

print('Dataset Dimensions: ')

display(breast_cancer.shape)

Dataset Dimensions:

(569, 32)

##################################

# Listing the column names and data types

##################################

print('Column Names and Data Types:')

display(breast_cancer.dtypes)

Column Names and Data Types:

id int64 diagnosis object radius_mean float64 texture_mean float64 perimeter_mean float64 area_mean float64 smoothness_mean float64 compactness_mean float64 concavity_mean float64 concave points_mean float64 symmetry_mean float64 fractal_dimension_mean float64 radius_se float64 texture_se float64 perimeter_se float64 area_se float64 smoothness_se float64 compactness_se float64 concavity_se float64 concave points_se float64 symmetry_se float64 fractal_dimension_se float64 radius_worst float64 texture_worst float64 perimeter_worst float64 area_worst float64 smoothness_worst float64 compactness_worst float64 concavity_worst float64 concave points_worst float64 symmetry_worst float64 fractal_dimension_worst float64 dtype: object

##################################

# Setting the ID column as row names

##################################

breast_cancer = breast_cancer.set_index("id")

##################################

# Taking a snapshot of the dataset

##################################

breast_cancer.head()

| diagnosis | radius_mean | texture_mean | perimeter_mean | area_mean | smoothness_mean | compactness_mean | concavity_mean | concave points_mean | symmetry_mean | ... | radius_worst | texture_worst | perimeter_worst | area_worst | smoothness_worst | compactness_worst | concavity_worst | concave points_worst | symmetry_worst | fractal_dimension_worst | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| id | |||||||||||||||||||||

| 842302 | M | 17.99 | 10.38 | 122.80 | 1001.0 | 0.11840 | 0.27760 | 0.3001 | 0.14710 | 0.2419 | ... | 25.38 | 17.33 | 184.60 | 2019.0 | 0.1622 | 0.6656 | 0.7119 | 0.2654 | 0.4601 | 0.11890 |

| 842517 | M | 20.57 | 17.77 | 132.90 | 1326.0 | 0.08474 | 0.07864 | 0.0869 | 0.07017 | 0.1812 | ... | 24.99 | 23.41 | 158.80 | 1956.0 | 0.1238 | 0.1866 | 0.2416 | 0.1860 | 0.2750 | 0.08902 |

| 84300903 | M | 19.69 | 21.25 | 130.00 | 1203.0 | 0.10960 | 0.15990 | 0.1974 | 0.12790 | 0.2069 | ... | 23.57 | 25.53 | 152.50 | 1709.0 | 0.1444 | 0.4245 | 0.4504 | 0.2430 | 0.3613 | 0.08758 |

| 84348301 | M | 11.42 | 20.38 | 77.58 | 386.1 | 0.14250 | 0.28390 | 0.2414 | 0.10520 | 0.2597 | ... | 14.91 | 26.50 | 98.87 | 567.7 | 0.2098 | 0.8663 | 0.6869 | 0.2575 | 0.6638 | 0.17300 |

| 84358402 | M | 20.29 | 14.34 | 135.10 | 1297.0 | 0.10030 | 0.13280 | 0.1980 | 0.10430 | 0.1809 | ... | 22.54 | 16.67 | 152.20 | 1575.0 | 0.1374 | 0.2050 | 0.4000 | 0.1625 | 0.2364 | 0.07678 |

5 rows × 31 columns

##################################

# Performing a general exploration of the numeric variables

##################################

print('Numeric Variable Summary:')

display(breast_cancer.describe(include='number').transpose())

Numeric Variable Summary:

| count | mean | std | min | 25% | 50% | 75% | max | |

|---|---|---|---|---|---|---|---|---|

| radius_mean | 569.0 | 14.127292 | 3.524049 | 6.981000 | 11.700000 | 13.370000 | 15.780000 | 28.11000 |

| texture_mean | 569.0 | 19.289649 | 4.301036 | 9.710000 | 16.170000 | 18.840000 | 21.800000 | 39.28000 |

| perimeter_mean | 569.0 | 91.969033 | 24.298981 | 43.790000 | 75.170000 | 86.240000 | 104.100000 | 188.50000 |

| area_mean | 569.0 | 654.889104 | 351.914129 | 143.500000 | 420.300000 | 551.100000 | 782.700000 | 2501.00000 |

| smoothness_mean | 569.0 | 0.096360 | 0.014064 | 0.052630 | 0.086370 | 0.095870 | 0.105300 | 0.16340 |

| compactness_mean | 569.0 | 0.104341 | 0.052813 | 0.019380 | 0.064920 | 0.092630 | 0.130400 | 0.34540 |

| concavity_mean | 569.0 | 0.088799 | 0.079720 | 0.000000 | 0.029560 | 0.061540 | 0.130700 | 0.42680 |

| concave points_mean | 569.0 | 0.048919 | 0.038803 | 0.000000 | 0.020310 | 0.033500 | 0.074000 | 0.20120 |

| symmetry_mean | 569.0 | 0.181162 | 0.027414 | 0.106000 | 0.161900 | 0.179200 | 0.195700 | 0.30400 |

| fractal_dimension_mean | 569.0 | 0.062798 | 0.007060 | 0.049960 | 0.057700 | 0.061540 | 0.066120 | 0.09744 |

| radius_se | 569.0 | 0.405172 | 0.277313 | 0.111500 | 0.232400 | 0.324200 | 0.478900 | 2.87300 |

| texture_se | 569.0 | 1.216853 | 0.551648 | 0.360200 | 0.833900 | 1.108000 | 1.474000 | 4.88500 |

| perimeter_se | 569.0 | 2.866059 | 2.021855 | 0.757000 | 1.606000 | 2.287000 | 3.357000 | 21.98000 |

| area_se | 569.0 | 40.337079 | 45.491006 | 6.802000 | 17.850000 | 24.530000 | 45.190000 | 542.20000 |

| smoothness_se | 569.0 | 0.007041 | 0.003003 | 0.001713 | 0.005169 | 0.006380 | 0.008146 | 0.03113 |

| compactness_se | 569.0 | 0.025478 | 0.017908 | 0.002252 | 0.013080 | 0.020450 | 0.032450 | 0.13540 |

| concavity_se | 569.0 | 0.031894 | 0.030186 | 0.000000 | 0.015090 | 0.025890 | 0.042050 | 0.39600 |

| concave points_se | 569.0 | 0.011796 | 0.006170 | 0.000000 | 0.007638 | 0.010930 | 0.014710 | 0.05279 |

| symmetry_se | 569.0 | 0.020542 | 0.008266 | 0.007882 | 0.015160 | 0.018730 | 0.023480 | 0.07895 |

| fractal_dimension_se | 569.0 | 0.003795 | 0.002646 | 0.000895 | 0.002248 | 0.003187 | 0.004558 | 0.02984 |

| radius_worst | 569.0 | 16.269190 | 4.833242 | 7.930000 | 13.010000 | 14.970000 | 18.790000 | 36.04000 |

| texture_worst | 569.0 | 25.677223 | 6.146258 | 12.020000 | 21.080000 | 25.410000 | 29.720000 | 49.54000 |

| perimeter_worst | 569.0 | 107.261213 | 33.602542 | 50.410000 | 84.110000 | 97.660000 | 125.400000 | 251.20000 |

| area_worst | 569.0 | 880.583128 | 569.356993 | 185.200000 | 515.300000 | 686.500000 | 1084.000000 | 4254.00000 |

| smoothness_worst | 569.0 | 0.132369 | 0.022832 | 0.071170 | 0.116600 | 0.131300 | 0.146000 | 0.22260 |

| compactness_worst | 569.0 | 0.254265 | 0.157336 | 0.027290 | 0.147200 | 0.211900 | 0.339100 | 1.05800 |

| concavity_worst | 569.0 | 0.272188 | 0.208624 | 0.000000 | 0.114500 | 0.226700 | 0.382900 | 1.25200 |

| concave points_worst | 569.0 | 0.114606 | 0.065732 | 0.000000 | 0.064930 | 0.099930 | 0.161400 | 0.29100 |

| symmetry_worst | 569.0 | 0.290076 | 0.061867 | 0.156500 | 0.250400 | 0.282200 | 0.317900 | 0.66380 |

| fractal_dimension_worst | 569.0 | 0.083946 | 0.018061 | 0.055040 | 0.071460 | 0.080040 | 0.092080 | 0.20750 |

1.3. Data Quality Assessment ¶

Data quality findings based on assessment are as follows:

- No duplicated rows were noted.

- No missing data noted for any variable with Null.Count>0 and Fill.Rate<1.0.

- No low variance observed for any variable with First.Second.Mode.Ratio>5.

- No low variance observed for any variable with Unique.Count.Ratio>10.

- High skewness observed for 5 variables with Skewness>3 or Skewness<(-3).

- area_se: Skewness = 5.447

- concavity_se: Skewness = 5.110

- fractal_dimension_se: Skewness = 3.923

- perimeter_se: Skewness = 3.443

- radius_se: Skewness = 3.088

##################################

# Counting the number of duplicated rows

##################################

breast_cancer.duplicated().sum()

np.int64(0)

##################################

# Gathering the data types for each column

##################################

data_type_list = list(breast_cancer.dtypes)

##################################

# Gathering the variable names for each column

##################################

variable_name_list = list(breast_cancer.columns)

##################################

# Gathering the number of observations for each column

##################################

row_count_list = list([len(breast_cancer)] * len(breast_cancer.columns))

##################################

# Gathering the number of missing data for each column

##################################

null_count_list = list(breast_cancer.isna().sum(axis=0))

##################################

# Gathering the number of non-missing data for each column

##################################

non_null_count_list = list(breast_cancer.count())

##################################

# Gathering the missing data percentage for each column

##################################

fill_rate_list = map(truediv, non_null_count_list, row_count_list)

##################################

# Formulating the summary

# for all columns

##################################

all_column_quality_summary = pd.DataFrame(zip(variable_name_list,

data_type_list,

row_count_list,

non_null_count_list,

null_count_list,

fill_rate_list),

columns=['Column.Name',

'Column.Type',

'Row.Count',

'Non.Null.Count',

'Null.Count',

'Fill.Rate'])

display(all_column_quality_summary)

| Column.Name | Column.Type | Row.Count | Non.Null.Count | Null.Count | Fill.Rate | |

|---|---|---|---|---|---|---|

| 0 | diagnosis | object | 569 | 569 | 0 | 1.0 |

| 1 | radius_mean | float64 | 569 | 569 | 0 | 1.0 |

| 2 | texture_mean | float64 | 569 | 569 | 0 | 1.0 |

| 3 | perimeter_mean | float64 | 569 | 569 | 0 | 1.0 |

| 4 | area_mean | float64 | 569 | 569 | 0 | 1.0 |

| 5 | smoothness_mean | float64 | 569 | 569 | 0 | 1.0 |

| 6 | compactness_mean | float64 | 569 | 569 | 0 | 1.0 |

| 7 | concavity_mean | float64 | 569 | 569 | 0 | 1.0 |

| 8 | concave points_mean | float64 | 569 | 569 | 0 | 1.0 |

| 9 | symmetry_mean | float64 | 569 | 569 | 0 | 1.0 |

| 10 | fractal_dimension_mean | float64 | 569 | 569 | 0 | 1.0 |

| 11 | radius_se | float64 | 569 | 569 | 0 | 1.0 |

| 12 | texture_se | float64 | 569 | 569 | 0 | 1.0 |

| 13 | perimeter_se | float64 | 569 | 569 | 0 | 1.0 |

| 14 | area_se | float64 | 569 | 569 | 0 | 1.0 |

| 15 | smoothness_se | float64 | 569 | 569 | 0 | 1.0 |

| 16 | compactness_se | float64 | 569 | 569 | 0 | 1.0 |

| 17 | concavity_se | float64 | 569 | 569 | 0 | 1.0 |

| 18 | concave points_se | float64 | 569 | 569 | 0 | 1.0 |

| 19 | symmetry_se | float64 | 569 | 569 | 0 | 1.0 |

| 20 | fractal_dimension_se | float64 | 569 | 569 | 0 | 1.0 |

| 21 | radius_worst | float64 | 569 | 569 | 0 | 1.0 |

| 22 | texture_worst | float64 | 569 | 569 | 0 | 1.0 |

| 23 | perimeter_worst | float64 | 569 | 569 | 0 | 1.0 |

| 24 | area_worst | float64 | 569 | 569 | 0 | 1.0 |

| 25 | smoothness_worst | float64 | 569 | 569 | 0 | 1.0 |

| 26 | compactness_worst | float64 | 569 | 569 | 0 | 1.0 |

| 27 | concavity_worst | float64 | 569 | 569 | 0 | 1.0 |

| 28 | concave points_worst | float64 | 569 | 569 | 0 | 1.0 |

| 29 | symmetry_worst | float64 | 569 | 569 | 0 | 1.0 |

| 30 | fractal_dimension_worst | float64 | 569 | 569 | 0 | 1.0 |

##################################

# Counting the number of columns

# with Fill.Rate < 1.00

##################################

len(all_column_quality_summary[(all_column_quality_summary['Fill.Rate']<1)])

0

##################################

# Identifying the rows

# with Fill.Rate < 0.90

##################################

column_low_fill_rate = all_column_quality_summary[(all_column_quality_summary['Fill.Rate']<0.90)]

##################################

# Gathering the indices for each observation

##################################

row_index_list = breast_cancer.index

##################################

# Gathering the number of columns for each observation

##################################

column_count_list = list([len(breast_cancer.columns)] * len(breast_cancer))

##################################

# Gathering the number of missing data for each row

##################################

null_row_list = list(breast_cancer.isna().sum(axis=1))

##################################

# Gathering the missing data percentage for each column

##################################

missing_rate_list = map(truediv, null_row_list, column_count_list)

##################################

# Identifying the rows

# with missing data

##################################

all_row_quality_summary = pd.DataFrame(zip(row_index_list,

column_count_list,

null_row_list,

missing_rate_list),

columns=['Row.Name',

'Column.Count',

'Null.Count',

'Missing.Rate'])

display(all_row_quality_summary)

| Row.Name | Column.Count | Null.Count | Missing.Rate | |

|---|---|---|---|---|

| 0 | 842302 | 31 | 0 | 0.0 |

| 1 | 842517 | 31 | 0 | 0.0 |

| 2 | 84300903 | 31 | 0 | 0.0 |

| 3 | 84348301 | 31 | 0 | 0.0 |

| 4 | 84358402 | 31 | 0 | 0.0 |

| ... | ... | ... | ... | ... |

| 564 | 926424 | 31 | 0 | 0.0 |

| 565 | 926682 | 31 | 0 | 0.0 |

| 566 | 926954 | 31 | 0 | 0.0 |

| 567 | 927241 | 31 | 0 | 0.0 |

| 568 | 92751 | 31 | 0 | 0.0 |

569 rows × 4 columns

##################################

# Counting the number of rows

# with Missing.Rate > 0.00

##################################

len(all_row_quality_summary[(all_row_quality_summary['Missing.Rate']>0.00)])

0

##################################

# Formulating the dataset

# with numeric columns only

##################################

breast_cancer_numeric = breast_cancer.select_dtypes(include='number')

##################################

# Gathering the variable names for each numeric column

##################################

numeric_variable_name_list = breast_cancer_numeric.columns

##################################

# Gathering the minimum value for each numeric column

##################################

numeric_minimum_list = breast_cancer_numeric.min()

##################################

# Gathering the mean value for each numeric column

##################################

numeric_mean_list = breast_cancer_numeric.mean()

##################################

# Gathering the median value for each numeric column

##################################

numeric_median_list = breast_cancer_numeric.median()

##################################

# Gathering the maximum value for each numeric column

##################################

numeric_maximum_list = breast_cancer_numeric.max()

##################################

# Gathering the first mode values for each numeric column

##################################

numeric_first_mode_list = [breast_cancer[x].value_counts(dropna=True).index.tolist()[0] for x in breast_cancer_numeric]

##################################

# Gathering the second mode values for each numeric column

##################################

numeric_second_mode_list = [breast_cancer[x].value_counts(dropna=True).index.tolist()[1] for x in breast_cancer_numeric]

##################################

# Gathering the count of first mode values for each numeric column

##################################

numeric_first_mode_count_list = [breast_cancer_numeric[x].isin([breast_cancer[x].value_counts(dropna=True).index.tolist()[0]]).sum() for x in breast_cancer_numeric]

##################################

# Gathering the count of second mode values for each numeric column

##################################

numeric_second_mode_count_list = [breast_cancer_numeric[x].isin([breast_cancer[x].value_counts(dropna=True).index.tolist()[1]]).sum() for x in breast_cancer_numeric]

##################################

# Gathering the first mode to second mode ratio for each numeric column

##################################

numeric_first_second_mode_ratio_list = map(truediv, numeric_first_mode_count_list, numeric_second_mode_count_list)

##################################

# Gathering the count of unique values for each numeric column

##################################

numeric_unique_count_list = breast_cancer_numeric.nunique(dropna=True)

##################################

# Gathering the number of observations for each numeric column

##################################

numeric_row_count_list = list([len(breast_cancer_numeric)] * len(breast_cancer_numeric.columns))

##################################

# Gathering the unique to count ratio for each numeric column

##################################

numeric_unique_count_ratio_list = map(truediv, numeric_unique_count_list, numeric_row_count_list)

##################################

# Gathering the skewness value for each numeric column

##################################

numeric_skewness_list = breast_cancer_numeric.skew()

##################################

# Gathering the kurtosis value for each numeric column

##################################

numeric_kurtosis_list = breast_cancer_numeric.kurtosis()

##################################

# Generating a column quality summary for the numeric column

##################################

numeric_column_quality_summary = pd.DataFrame(zip(numeric_variable_name_list,

numeric_minimum_list,

numeric_mean_list,

numeric_median_list,

numeric_maximum_list,

numeric_first_mode_list,

numeric_second_mode_list,

numeric_first_mode_count_list,

numeric_second_mode_count_list,

numeric_first_second_mode_ratio_list,

numeric_unique_count_list,

numeric_row_count_list,

numeric_unique_count_ratio_list,

numeric_skewness_list,

numeric_kurtosis_list),

columns=['Numeric.Column.Name',

'Minimum',

'Mean',

'Median',

'Maximum',

'First.Mode',

'Second.Mode',

'First.Mode.Count',

'Second.Mode.Count',

'First.Second.Mode.Ratio',

'Unique.Count',

'Row.Count',

'Unique.Count.Ratio',

'Skewness',

'Kurtosis'])

display(numeric_column_quality_summary)

| Numeric.Column.Name | Minimum | Mean | Median | Maximum | First.Mode | Second.Mode | First.Mode.Count | Second.Mode.Count | First.Second.Mode.Ratio | Unique.Count | Row.Count | Unique.Count.Ratio | Skewness | Kurtosis | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | radius_mean | 6.981000 | 14.127292 | 13.370000 | 28.11000 | 12.340000 | 11.060000 | 4 | 3 | 1.333333 | 456 | 569 | 0.801406 | 0.942380 | 0.845522 |

| 1 | texture_mean | 9.710000 | 19.289649 | 18.840000 | 39.28000 | 16.840000 | 19.830000 | 3 | 3 | 1.000000 | 479 | 569 | 0.841828 | 0.650450 | 0.758319 |

| 2 | perimeter_mean | 43.790000 | 91.969033 | 86.240000 | 188.50000 | 82.610000 | 134.700000 | 3 | 3 | 1.000000 | 522 | 569 | 0.917399 | 0.990650 | 0.972214 |

| 3 | area_mean | 143.500000 | 654.889104 | 551.100000 | 2501.00000 | 512.200000 | 394.100000 | 3 | 2 | 1.500000 | 539 | 569 | 0.947276 | 1.645732 | 3.652303 |

| 4 | smoothness_mean | 0.052630 | 0.096360 | 0.095870 | 0.16340 | 0.100700 | 0.105400 | 5 | 4 | 1.250000 | 474 | 569 | 0.833040 | 0.456324 | 0.855975 |

| 5 | compactness_mean | 0.019380 | 0.104341 | 0.092630 | 0.34540 | 0.114700 | 0.120600 | 3 | 3 | 1.000000 | 537 | 569 | 0.943761 | 1.190123 | 1.650130 |

| 6 | concavity_mean | 0.000000 | 0.088799 | 0.061540 | 0.42680 | 0.000000 | 0.120400 | 13 | 3 | 4.333333 | 537 | 569 | 0.943761 | 1.401180 | 1.998638 |

| 7 | concave points_mean | 0.000000 | 0.048919 | 0.033500 | 0.20120 | 0.000000 | 0.028640 | 13 | 3 | 4.333333 | 542 | 569 | 0.952548 | 1.171180 | 1.066556 |

| 8 | symmetry_mean | 0.106000 | 0.181162 | 0.179200 | 0.30400 | 0.176900 | 0.189300 | 4 | 4 | 1.000000 | 432 | 569 | 0.759227 | 0.725609 | 1.287933 |

| 9 | fractal_dimension_mean | 0.049960 | 0.062798 | 0.061540 | 0.09744 | 0.067820 | 0.061130 | 3 | 3 | 1.000000 | 499 | 569 | 0.876977 | 1.304489 | 3.005892 |

| 10 | radius_se | 0.111500 | 0.405172 | 0.324200 | 2.87300 | 0.286000 | 0.220400 | 3 | 3 | 1.000000 | 540 | 569 | 0.949033 | 3.088612 | 17.686726 |

| 11 | texture_se | 0.360200 | 1.216853 | 1.108000 | 4.88500 | 0.856100 | 1.350000 | 3 | 3 | 1.000000 | 519 | 569 | 0.912127 | 1.646444 | 5.349169 |

| 12 | perimeter_se | 0.757000 | 2.866059 | 2.287000 | 21.98000 | 1.778000 | 1.143000 | 4 | 2 | 2.000000 | 533 | 569 | 0.936731 | 3.443615 | 21.401905 |

| 13 | area_se | 6.802000 | 40.337079 | 24.530000 | 542.20000 | 16.970000 | 16.640000 | 3 | 3 | 1.000000 | 528 | 569 | 0.927944 | 5.447186 | 49.209077 |

| 14 | smoothness_se | 0.001713 | 0.007041 | 0.006380 | 0.03113 | 0.005910 | 0.006064 | 2 | 2 | 1.000000 | 547 | 569 | 0.961336 | 2.314450 | 10.469840 |

| 15 | compactness_se | 0.002252 | 0.025478 | 0.020450 | 0.13540 | 0.018120 | 0.011040 | 3 | 3 | 1.000000 | 541 | 569 | 0.950791 | 1.902221 | 5.106252 |

| 16 | concavity_se | 0.000000 | 0.031894 | 0.025890 | 0.39600 | 0.000000 | 0.021850 | 13 | 2 | 6.500000 | 533 | 569 | 0.936731 | 5.110463 | 48.861395 |

| 17 | concave points_se | 0.000000 | 0.011796 | 0.010930 | 0.05279 | 0.000000 | 0.011670 | 13 | 3 | 4.333333 | 507 | 569 | 0.891037 | 1.444678 | 5.126302 |

| 18 | symmetry_se | 0.007882 | 0.020542 | 0.018730 | 0.07895 | 0.013440 | 0.020450 | 4 | 3 | 1.333333 | 498 | 569 | 0.875220 | 2.195133 | 7.896130 |

| 19 | fractal_dimension_se | 0.000895 | 0.003795 | 0.003187 | 0.02984 | 0.002256 | 0.002205 | 2 | 2 | 1.000000 | 545 | 569 | 0.957821 | 3.923969 | 26.280847 |

| 20 | radius_worst | 7.930000 | 16.269190 | 14.970000 | 36.04000 | 12.360000 | 13.500000 | 5 | 4 | 1.250000 | 457 | 569 | 0.803163 | 1.103115 | 0.944090 |

| 21 | texture_worst | 12.020000 | 25.677223 | 25.410000 | 49.54000 | 17.700000 | 27.260000 | 3 | 3 | 1.000000 | 511 | 569 | 0.898067 | 0.498321 | 0.224302 |

| 22 | perimeter_worst | 50.410000 | 107.261213 | 97.660000 | 251.20000 | 117.700000 | 105.900000 | 3 | 3 | 1.000000 | 514 | 569 | 0.903339 | 1.128164 | 1.070150 |

| 23 | area_worst | 185.200000 | 880.583128 | 686.500000 | 4254.00000 | 698.800000 | 808.900000 | 2 | 2 | 1.000000 | 544 | 569 | 0.956063 | 1.859373 | 4.396395 |

| 24 | smoothness_worst | 0.071170 | 0.132369 | 0.131300 | 0.22260 | 0.140100 | 0.131200 | 4 | 4 | 1.000000 | 411 | 569 | 0.722320 | 0.415426 | 0.517825 |

| 25 | compactness_worst | 0.027290 | 0.254265 | 0.211900 | 1.05800 | 0.148600 | 0.341600 | 3 | 3 | 1.000000 | 529 | 569 | 0.929701 | 1.473555 | 3.039288 |

| 26 | concavity_worst | 0.000000 | 0.272188 | 0.226700 | 1.25200 | 0.000000 | 0.450400 | 13 | 3 | 4.333333 | 539 | 569 | 0.947276 | 1.150237 | 1.615253 |

| 27 | concave points_worst | 0.000000 | 0.114606 | 0.099930 | 0.29100 | 0.000000 | 0.110500 | 13 | 3 | 4.333333 | 492 | 569 | 0.864675 | 0.492616 | -0.535535 |

| 28 | symmetry_worst | 0.156500 | 0.290076 | 0.282200 | 0.66380 | 0.236900 | 0.310900 | 3 | 3 | 1.000000 | 500 | 569 | 0.878735 | 1.433928 | 4.444560 |

| 29 | fractal_dimension_worst | 0.055040 | 0.083946 | 0.080040 | 0.20750 | 0.074270 | 0.087010 | 3 | 2 | 1.500000 | 535 | 569 | 0.940246 | 1.662579 | 5.244611 |

##################################

# Counting the number of numeric columns

# with First.Second.Mode.Ratio > 5.00

##################################

len(numeric_column_quality_summary[(numeric_column_quality_summary['First.Second.Mode.Ratio']>10)])

0

##################################

# Counting the number of numeric columns

# with Unique.Count.Ratio > 10.00

##################################

len(numeric_column_quality_summary[(numeric_column_quality_summary['Unique.Count.Ratio']>10)])

0

#################################

# Counting the number of numeric columns

# with Skewness > 3.00 or Skewness < -3.00

##################################

len(numeric_column_quality_summary[(numeric_column_quality_summary['Skewness']>3) | (numeric_column_quality_summary['Skewness']<(-3))])

5

##################################

# Identifying the numerical columns

# with Skewness > 3.00 or Skewness < -3.00

##################################

display(numeric_column_quality_summary[(numeric_column_quality_summary['Skewness']>3) | (numeric_column_quality_summary['Skewness']<(-3))].sort_values(by=['Skewness'], ascending=False))

| Numeric.Column.Name | Minimum | Mean | Median | Maximum | First.Mode | Second.Mode | First.Mode.Count | Second.Mode.Count | First.Second.Mode.Ratio | Unique.Count | Row.Count | Unique.Count.Ratio | Skewness | Kurtosis | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 13 | area_se | 6.802000 | 40.337079 | 24.530000 | 542.20000 | 16.970000 | 16.640000 | 3 | 3 | 1.0 | 528 | 569 | 0.927944 | 5.447186 | 49.209077 |

| 16 | concavity_se | 0.000000 | 0.031894 | 0.025890 | 0.39600 | 0.000000 | 0.021850 | 13 | 2 | 6.5 | 533 | 569 | 0.936731 | 5.110463 | 48.861395 |

| 19 | fractal_dimension_se | 0.000895 | 0.003795 | 0.003187 | 0.02984 | 0.002256 | 0.002205 | 2 | 2 | 1.0 | 545 | 569 | 0.957821 | 3.923969 | 26.280847 |

| 12 | perimeter_se | 0.757000 | 2.866059 | 2.287000 | 21.98000 | 1.778000 | 1.143000 | 4 | 2 | 2.0 | 533 | 569 | 0.936731 | 3.443615 | 21.401905 |

| 10 | radius_se | 0.111500 | 0.405172 | 0.324200 | 2.87300 | 0.286000 | 0.220400 | 3 | 3 | 1.0 | 540 | 569 | 0.949033 | 3.088612 | 17.686726 |

##################################

# Formulating the dataset

# with categorical columns only

##################################

breast_cancer_categorical = breast_cancer.select_dtypes(include=['category','object'])

##################################

# Gathering the variable names for the categorical column

##################################

categorical_variable_name_list = breast_cancer_categorical.columns

##################################

# Gathering the first mode values for each categorical column

##################################

categorical_first_mode_list = [breast_cancer[x].value_counts().index.tolist()[0] for x in breast_cancer_categorical]

##################################

# Gathering the second mode values for each categorical column

##################################

categorical_second_mode_list = [breast_cancer[x].value_counts().index.tolist()[1] for x in breast_cancer_categorical]

##################################

# Gathering the count of first mode values for each categorical column

##################################

categorical_first_mode_count_list = [breast_cancer_categorical[x].isin([breast_cancer[x].value_counts(dropna=True).index.tolist()[0]]).sum() for x in breast_cancer_categorical]

##################################

# Gathering the count of second mode values for each categorical column

##################################

categorical_second_mode_count_list = [breast_cancer_categorical[x].isin([breast_cancer[x].value_counts(dropna=True).index.tolist()[1]]).sum() for x in breast_cancer_categorical]

##################################

# Gathering the first mode to second mode ratio for each categorical column

##################################

categorical_first_second_mode_ratio_list = map(truediv, categorical_first_mode_count_list, categorical_second_mode_count_list)

##################################

# Gathering the count of unique values for each categorical column

##################################

categorical_unique_count_list = breast_cancer_categorical.nunique(dropna=True)

##################################

# Gathering the number of observations for each categorical column

##################################

categorical_row_count_list = list([len(breast_cancer_categorical)] * len(breast_cancer_categorical.columns))

##################################

# Gathering the unique to count ratio for each categorical column

##################################

categorical_unique_count_ratio_list = map(truediv, categorical_unique_count_list, categorical_row_count_list)

##################################

# Generating a column quality summary for the categorical columns

##################################

categorical_column_quality_summary = pd.DataFrame(zip(categorical_variable_name_list,

categorical_first_mode_list,

categorical_second_mode_list,

categorical_first_mode_count_list,

categorical_second_mode_count_list,

categorical_first_second_mode_ratio_list,

categorical_unique_count_list,

categorical_row_count_list,

categorical_unique_count_ratio_list),

columns=['Categorical.Column.Name',

'First.Mode',

'Second.Mode',

'First.Mode.Count',

'Second.Mode.Count',

'First.Second.Mode.Ratio',

'Unique.Count',

'Row.Count',

'Unique.Count.Ratio'])

display(categorical_column_quality_summary)

| Categorical.Column.Name | First.Mode | Second.Mode | First.Mode.Count | Second.Mode.Count | First.Second.Mode.Ratio | Unique.Count | Row.Count | Unique.Count.Ratio | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | diagnosis | B | M | 357 | 212 | 1.683962 | 2 | 569 | 0.003515 |

##################################

# Counting the number of categorical columns

# with First.Second.Mode.Ratio > 5.00

##################################

len(categorical_column_quality_summary[(categorical_column_quality_summary['First.Second.Mode.Ratio']>5)])

0

##################################

# Counting the number of categorical columns

# with Unique.Count.Ratio > 10.00

##################################

len(categorical_column_quality_summary[(categorical_column_quality_summary['Unique.Count.Ratio']>10)])

0

1.4. Data Preprocessing ¶

1.4.1 Data Splitting¶

- The baseline dataset is comprised of:

- 569 rows (observations)

- 357 diagnosis=B: 62.74%

- 212 diagnosis=M: 37.26%

- 31 columns (variables)

- 1/31 target (categorical)

- diagnosis

- 30/31 predictor (numeric)

- radius_mean

- texture_mean

- perimeter_mean

- area_mean

- smoothness_mean

- compactness_mean

- concavity_mean

- concave points_mean

- symmetry_mean

- fractal_dimension_mean

- radius_se

- texture_se

- perimeter_se

- area_se

- smoothness_se

- compactness_se

- concavity_se

- concave points_se

- symmetry_se

- fractal_dimension_se

- radius_worst

- texture_worst

- perimeter_worst

- area_worst

- smoothness_worst

- compactness_worst

- concavity_worst

- concave points_worst

- symmetry_worst

- fractal_dimension_worst

- 1/31 target (categorical)

- 569 rows (observations)

- The baseline dataset was divided into three subsets using a fixed random seed:

- test data: 25% of the original data with class stratification applied

- train data (initial): 75% of the original data with class stratification applied

- train data (final): 75% of the train (initial) data with class stratification applied

- validation data: 25% of the train (initial) data with class stratification applied

- Models were developed from the train data (final). Using the same dataset, a subset of models with optimal hyperparameters were selected, based on cross-validation.

- Among candidate models with optimal hyperparameters, the final model was selected based on performance on the validation data.

- Performance of the selected final model (and other candidate models for post-model selection comparison) were evaluated using the test data.

- The train data (final) subset is comprised of:

- 319 rows (observations)

- 200 diagnosis=B: 62.69%

- 119 diagnosis=M: 37.30%

- 31 columns (variables)

- 319 rows (observations)

- The validation data subset is comprised of:

- 107 rows (observations)

- 67 diagnosis=B: 62.61%

- 40 diagnosis=M: 37.38%

- 31 columns (variables)

- 107 rows (observations)

- The test data subset is comprised of:

- 143 rows (observations)

- 90 diagnosis=B: 62.93%

- 53 diagnosis=M: 37.06%

- 31 columns (variables)

- 143 rows (observations)

##################################

# Creating a dataset copy

# of the original data

##################################

breast_cancer_baseline = breast_cancer.copy()

##################################

# Performing a general exploration

# of the baseline dataset

##################################

print('Final Dataset Dimensions: ')

display(breast_cancer_baseline.shape)

Final Dataset Dimensions:

(569, 31)

##################################

# Obtaining the distribution of

# of the target variable

##################################

print('Target Variable Breakdown: ')

breast_cancer_breakdown = breast_cancer_baseline.groupby('diagnosis', observed=True).size().reset_index(name='Count')

breast_cancer_breakdown['Percentage'] = (breast_cancer_breakdown['Count'] / len(breast_cancer_baseline)) * 100

display(breast_cancer_breakdown)

Target Variable Breakdown:

| diagnosis | Count | Percentage | |

|---|---|---|---|

| 0 | B | 357 | 62.741652 |

| 1 | M | 212 | 37.258348 |

##################################

# Formulating the train and test data

# from the final dataset

# by applying stratification and

# using a 75-25 ratio

##################################

breast_cancer_train_initial, breast_cancer_test = train_test_split(breast_cancer_baseline,

test_size=0.25,

stratify=breast_cancer_baseline['diagnosis'],

random_state=987654321)

##################################

# Performing a general exploration

# of the initial training dataset

##################################

X_train_initial = breast_cancer_train_initial.drop('diagnosis', axis = 1)

y_train_initial = breast_cancer_train_initial['diagnosis']

print('Initial Train Dataset Dimensions: ')

display(X_train_initial.shape)

display(y_train_initial.shape)

print('Initial Train Target Variable Breakdown: ')

display(y_train_initial.value_counts())

print('Initial Train Target Variable Proportion: ')

display(y_train_initial.value_counts(normalize = True))

Initial Train Dataset Dimensions:

(426, 30)

(426,)

Initial Train Target Variable Breakdown:

diagnosis B 267 M 159 Name: count, dtype: int64

Initial Train Target Variable Proportion:

diagnosis B 0.626761 M 0.373239 Name: proportion, dtype: float64

##################################

# Performing a general exploration

# of the test dataset

##################################

X_test = breast_cancer_test.drop('diagnosis', axis = 1)

y_test = breast_cancer_test['diagnosis']

print('Test Dataset Dimensions: ')

display(X_test.shape)

display(y_test.shape)

print('Test Target Variable Breakdown: ')

display(y_test.value_counts())

print('Test Target Variable Proportion: ')

display(y_test.value_counts(normalize = True))

Test Dataset Dimensions:

(143, 30)

(143,)

Test Target Variable Breakdown:

diagnosis B 90 M 53 Name: count, dtype: int64

Test Target Variable Proportion:

diagnosis B 0.629371 M 0.370629 Name: proportion, dtype: float64

##################################

# Formulating the train and validation data

# from the train dataset

# by applying stratification and

# using a 75-25 ratio

##################################

breast_cancer_train, breast_cancer_validation = train_test_split(breast_cancer_train_initial,

test_size=0.25,

stratify=breast_cancer_train_initial['diagnosis'],

random_state=987654321)

##################################

# Performing a general exploration

# of the final training dataset

##################################

X_train = breast_cancer_train.drop('diagnosis', axis = 1)

y_train = breast_cancer_train['diagnosis']

print('Final Train Dataset Dimensions: ')

display(X_train.shape)

display(y_train.shape)

print('Final Train Target Variable Breakdown: ')

display(y_train.value_counts())

print('Final Train Target Variable Proportion: ')

display(y_train.value_counts(normalize = True))

Final Train Dataset Dimensions:

(319, 30)

(319,)

Final Train Target Variable Breakdown:

diagnosis B 200 M 119 Name: count, dtype: int64

Final Train Target Variable Proportion:

diagnosis B 0.626959 M 0.373041 Name: proportion, dtype: float64

##################################

# Performing a general exploration

# of the validation dataset

##################################

X_validation = breast_cancer_validation.drop('diagnosis', axis = 1)

y_validation = breast_cancer_validation['diagnosis']

print('Validation Dataset Dimensions: ')

display(X_validation.shape)

display(y_validation.shape)

print('Validation Target Variable Breakdown: ')

display(y_validation.value_counts())

print('Validation Target Variable Proportion: ')

display(y_validation.value_counts(normalize = True))

Validation Dataset Dimensions:

(107, 30)

(107,)

Validation Target Variable Breakdown:

diagnosis B 67 M 40 Name: count, dtype: int64

Validation Target Variable Proportion:

diagnosis B 0.626168 M 0.373832 Name: proportion, dtype: float64

##################################

# Saving the training data

# to the DATASETS_FINAL_TRAIN_PATH

# and DATASETS_FINAL_TRAIN_FEATURES_PATH

# and DATASETS_FINAL_TRAIN_TARGET_PATH

##################################

breast_cancer_train.to_csv(os.path.join("..", DATASETS_FINAL_TRAIN_PATH, "breast_cancer_train.csv"), index=False)

X_train.to_csv(os.path.join("..", DATASETS_FINAL_TRAIN_FEATURES_PATH, "X_train.csv"), index=False)

y_train.to_csv(os.path.join("..", DATASETS_FINAL_TRAIN_TARGET_PATH, "y_train.csv"), index=False)

##################################

# Saving the validation data

# to the DATASETS_FINAL_VALIDATION_PATH

# and DATASETS_FINAL_VALIDATION_FEATURE_PATH

# and DATASETS_FINAL_VALIDATION_TARGET_PATH

##################################

breast_cancer_validation.to_csv(os.path.join("..", DATASETS_FINAL_VALIDATION_PATH, "breast_cancer_validation.csv"), index=False)

X_validation.to_csv(os.path.join("..", DATASETS_FINAL_VALIDATION_FEATURES_PATH, "X_validation.csv"), index=False)

y_validation.to_csv(os.path.join("..", DATASETS_FINAL_VALIDATION_TARGET_PATH, "y_validation.csv"), index=False)

##################################

# Saving the test data

# to the DATASETS_FINAL_TEST_PATH

# and DATASETS_FINAL_TEST_FEATURES_PATH

# and DATASETS_FINAL_TEST_TARGET_PATH

##################################

breast_cancer_test.to_csv(os.path.join("..", DATASETS_FINAL_TEST_PATH, "breast_cancer_test.csv"), index=False)

X_test.to_csv(os.path.join("..", DATASETS_FINAL_TEST_FEATURES_PATH, "X_test.csv"), index=False)

y_test.to_csv(os.path.join("..", DATASETS_FINAL_TEST_TARGET_PATH, "y_test.csv"), index=False)

1.4.2 Outlier and Distributional Shape Analysis¶

Outlier and distributional shape analysis findings based on assessment of the training data are as follows:

- High skewness observed for 5 variables with Skewness>3 or Skewness<(-3).

- area_se: Skewness = 6.562

- concavity_se: Skewness = 5.648

- fractal_dimension_se: Skewness = 4.280

- perimeter_se: Skewness = 4.136

- radius_se: Skewness = 3.775

- Relatively high number of outliers observed for 7 numeric variables with Outlier.Ratio>0.05.

- area_se: Outlier.Ratio = 0.110

- radius_se: Outlier.Ratio = 0.075

- perimeter_se: Outlier.Ratio = 0.075

- smoothness_se: Outlier.Ratio = 0.059

- compactness_se: Outlier.Ratio = 0.059

- fractal_dimension_se: Outlier.Ratio = 0.056

- symmetry_se: Outlier.Ratio = 0.050

##################################

# Formulating the training dataset

# with numeric columns only

##################################

breast_cancer_train_numeric = breast_cancer_train.select_dtypes(include='number')

##################################

# Gathering the variable names for each numeric column

##################################

numeric_variable_name_list = list(breast_cancer_train_numeric.columns)

##################################

# Gathering the skewness value for each numeric column

##################################

numeric_skewness_list = breast_cancer_train_numeric.skew()

##################################

# Computing the interquartile range

# for all columns

##################################

breast_cancer_train_numeric_q1 = breast_cancer_train_numeric.quantile(0.25)

breast_cancer_train_numeric_q3 = breast_cancer_train_numeric.quantile(0.75)

breast_cancer_train_numeric_iqr = breast_cancer_train_numeric_q3 - breast_cancer_train_numeric_q1

##################################

# Gathering the outlier count for each numeric column

# based on the interquartile range criterion

##################################

numeric_outlier_count_list = ((breast_cancer_train_numeric < (breast_cancer_train_numeric_q1 - 1.5 * breast_cancer_train_numeric_iqr)) | (breast_cancer_train_numeric > (breast_cancer_train_numeric_q3 + 1.5 * breast_cancer_train_numeric_iqr))).sum()

##################################

# Gathering the number of observations for each column

##################################

numeric_row_count_list = list([len(breast_cancer_train_numeric)] * len(breast_cancer_train_numeric.columns))

##################################

# Gathering the unique to count ratio for each categorical column

##################################

numeric_outlier_ratio_list = map(truediv, numeric_outlier_count_list, numeric_row_count_list)

##################################

# Formulating the outlier summary

# for all numeric columns

##################################

numeric_column_outlier_summary = pd.DataFrame(zip(numeric_variable_name_list,

numeric_skewness_list,

numeric_outlier_count_list,

numeric_row_count_list,

numeric_outlier_ratio_list),

columns=['Numeric.Column.Name',

'Skewness',

'Outlier.Count',

'Row.Count',

'Outlier.Ratio'])

display(numeric_column_outlier_summary)

| Numeric.Column.Name | Skewness | Outlier.Count | Row.Count | Outlier.Ratio | |

|---|---|---|---|---|---|

| 0 | radius_mean | 0.966211 | 6 | 319 | 0.018809 |

| 1 | texture_mean | 0.746964 | 4 | 319 | 0.012539 |

| 2 | perimeter_mean | 1.034320 | 6 | 319 | 0.018809 |

| 3 | area_mean | 1.819687 | 9 | 319 | 0.028213 |

| 4 | smoothness_mean | 0.166009 | 1 | 319 | 0.003135 |

| 5 | compactness_mean | 1.115958 | 6 | 319 | 0.018809 |

| 6 | concavity_mean | 1.412274 | 10 | 319 | 0.031348 |

| 7 | concave points_mean | 1.155582 | 11 | 319 | 0.034483 |

| 8 | symmetry_mean | 0.532891 | 7 | 319 | 0.021944 |

| 9 | fractal_dimension_mean | 1.054941 | 8 | 319 | 0.025078 |

| 10 | radius_se | 3.775498 | 24 | 319 | 0.075235 |

| 11 | texture_se | 1.464707 | 10 | 319 | 0.031348 |

| 12 | perimeter_se | 4.136225 | 24 | 319 | 0.075235 |

| 13 | area_se | 6.562034 | 35 | 319 | 0.109718 |

| 14 | smoothness_se | 1.313172 | 19 | 319 | 0.059561 |

| 15 | compactness_se | 1.701432 | 19 | 319 | 0.059561 |

| 16 | concavity_se | 5.648674 | 14 | 319 | 0.043887 |

| 17 | concave points_se | 1.592173 | 14 | 319 | 0.043887 |

| 18 | symmetry_se | 2.442436 | 16 | 319 | 0.050157 |

| 19 | fractal_dimension_se | 4.280973 | 18 | 319 | 0.056426 |

| 20 | radius_worst | 1.016127 | 3 | 319 | 0.009404 |

| 21 | texture_worst | 0.476084 | 2 | 319 | 0.006270 |

| 22 | perimeter_worst | 1.075965 | 5 | 319 | 0.015674 |

| 23 | area_worst | 1.892646 | 13 | 319 | 0.040752 |

| 24 | smoothness_worst | 0.237077 | 0 | 319 | 0.000000 |

| 25 | compactness_worst | 1.098476 | 6 | 319 | 0.018809 |

| 26 | concavity_worst | 1.067913 | 5 | 319 | 0.015674 |

| 27 | concave points_worst | 0.436446 | 0 | 319 | 0.000000 |

| 28 | symmetry_worst | 1.154060 | 10 | 319 | 0.031348 |

| 29 | fractal_dimension_worst | 1.001579 | 10 | 319 | 0.031348 |

##################################

# Identifying the numerical columns

# with Skewness > 3.00 or Skewness < -3.00

##################################

display(numeric_column_outlier_summary[(numeric_column_outlier_summary['Skewness']>3) | (numeric_column_outlier_summary['Skewness']<(-3))].sort_values(by=['Skewness'], ascending=False))

| Numeric.Column.Name | Skewness | Outlier.Count | Row.Count | Outlier.Ratio | |

|---|---|---|---|---|---|

| 13 | area_se | 6.562034 | 35 | 319 | 0.109718 |

| 16 | concavity_se | 5.648674 | 14 | 319 | 0.043887 |

| 19 | fractal_dimension_se | 4.280973 | 18 | 319 | 0.056426 |

| 12 | perimeter_se | 4.136225 | 24 | 319 | 0.075235 |

| 10 | radius_se | 3.775498 | 24 | 319 | 0.075235 |

##################################

# Identifying the numerical columns

# with Outlier.Ratio > 0.05

##################################

display(numeric_column_outlier_summary[numeric_column_outlier_summary['Outlier.Ratio']>0.05].sort_values(by=['Outlier.Ratio'], ascending=False))

| Numeric.Column.Name | Skewness | Outlier.Count | Row.Count | Outlier.Ratio | |

|---|---|---|---|---|---|

| 13 | area_se | 6.562034 | 35 | 319 | 0.109718 |

| 10 | radius_se | 3.775498 | 24 | 319 | 0.075235 |

| 12 | perimeter_se | 4.136225 | 24 | 319 | 0.075235 |

| 14 | smoothness_se | 1.313172 | 19 | 319 | 0.059561 |

| 15 | compactness_se | 1.701432 | 19 | 319 | 0.059561 |

| 19 | fractal_dimension_se | 4.280973 | 18 | 319 | 0.056426 |

| 18 | symmetry_se | 2.442436 | 16 | 319 | 0.050157 |

##################################

# Formulating the individual boxplots

# for all numeric columns

##################################

for column in breast_cancer_train_numeric:

plt.figure(figsize=(17,1))

sns.boxplot(data=breast_cancer_train_numeric, x=column)

plt.show()

plt.close()

1.4.3 Collinearity¶

Collinearity evaluation findings based on assessment of the training data are as follows:

- Predictors were predominantly positively correlated with 50% reporting correlation values ranging from 0.129 to 0.558.

- High Pearson.Correlation values > 0.90 were noted for 4.60% (20/435) of the pairwise combinations of predictors:

- radius_mean and perimeter_mean: Pearson.Correlation = 0.997

- radius_worst and perimeter_worst: Pearson.Correlation = 0.993

- perimeter_mean and area_mean: Pearson.Correlation = 0.985

- radius_mean and area_mean: Pearson.Correlation = 0.984

- radius_worst and area_worst: Pearson.Correlation = 0.982

- perimeter_worst and area_worst: Pearson.Correlation = 0.978

- perimeter_mean and perimeter_worst: Pearson.Correlation = 0.972

- perimeter_mean and radius_worst: Pearson.Correlation = 0.972

- radius_mean and radius_worst: Pearson.Correlation = 0.971

- radius_se and perimeter_se: Pearson.Correlation = 0.971

- radius_mean and perimeter_worst: Pearson.Correlation = 0.967

- area_mean and area_worst: Pearson.Correlation = 0.964

- area_mean and radius_worst: Pearson.Correlation = 0.958

- area_mean and perimeter_worst: Pearson.Correlation = 0.955

- perimeter_mean and area_worst: Pearson.Correlation = 0.951

- radius_se and area_se: Pearson.Correlation = 0.948

- radius_mean and area_worst: Pearson.Correlation = 0.948

- perimeter_se and area_se: Pearson.Correlation = 0.942

- texture_mean and texture_worst: Pearson.Correlation = 0.923

- concave points_mean and concave points_worst: Pearson.Correlation = 0.911

- concavity_mean and concave points_mean: Pearson.Correlation = 0.900

##################################

# Creating a dataset copy

# with only the predictors present

# for correlation analysis

##################################

breast_cancer_train_correlation = breast_cancer_train.drop(['diagnosis'], axis=1)

display(breast_cancer_train_correlation)

| radius_mean | texture_mean | perimeter_mean | area_mean | smoothness_mean | compactness_mean | concavity_mean | concave points_mean | symmetry_mean | fractal_dimension_mean | ... | radius_worst | texture_worst | perimeter_worst | area_worst | smoothness_worst | compactness_worst | concavity_worst | concave points_worst | symmetry_worst | fractal_dimension_worst | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| id | |||||||||||||||||||||

| 868826 | 14.950 | 17.57 | 96.85 | 678.1 | 0.11670 | 0.13050 | 0.15390 | 0.08624 | 0.1957 | 0.06216 | ... | 18.55 | 21.43 | 121.40 | 971.4 | 0.1411 | 0.21640 | 0.33550 | 0.16670 | 0.3414 | 0.07147 |

| 8810703 | 28.110 | 18.47 | 188.50 | 2499.0 | 0.11420 | 0.15160 | 0.32010 | 0.15950 | 0.1648 | 0.05525 | ... | 28.11 | 18.47 | 188.50 | 2499.0 | 0.1142 | 0.15160 | 0.32010 | 0.15950 | 0.1648 | 0.05525 |

| 906878 | 13.660 | 19.13 | 89.46 | 575.3 | 0.09057 | 0.11470 | 0.09657 | 0.04812 | 0.1848 | 0.06181 | ... | 15.14 | 25.50 | 101.40 | 708.8 | 0.1147 | 0.31670 | 0.36600 | 0.14070 | 0.2744 | 0.08839 |

| 911654 | 14.200 | 20.53 | 92.41 | 618.4 | 0.08931 | 0.11080 | 0.05063 | 0.03058 | 0.1506 | 0.06009 | ... | 16.45 | 27.26 | 112.10 | 828.5 | 0.1153 | 0.34290 | 0.25120 | 0.13390 | 0.2534 | 0.07858 |

| 903483 | 8.734 | 16.84 | 55.27 | 234.3 | 0.10390 | 0.07428 | 0.00000 | 0.00000 | 0.1985 | 0.07098 | ... | 10.17 | 22.80 | 64.01 | 317.0 | 0.1460 | 0.13100 | 0.00000 | 0.00000 | 0.2445 | 0.08865 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 84862001 | 16.130 | 20.68 | 108.10 | 798.8 | 0.11700 | 0.20220 | 0.17220 | 0.10280 | 0.2164 | 0.07356 | ... | 20.96 | 31.48 | 136.80 | 1315.0 | 0.1789 | 0.42330 | 0.47840 | 0.20730 | 0.3706 | 0.11420 |

| 90317302 | 10.260 | 12.22 | 65.75 | 321.6 | 0.09996 | 0.07542 | 0.01923 | 0.01968 | 0.1800 | 0.06569 | ... | 11.38 | 15.65 | 73.23 | 394.5 | 0.1343 | 0.16500 | 0.08615 | 0.06696 | 0.2937 | 0.07722 |

| 86211 | 12.180 | 17.84 | 77.79 | 451.1 | 0.10450 | 0.07057 | 0.02490 | 0.02941 | 0.1900 | 0.06635 | ... | 12.83 | 20.92 | 82.14 | 495.2 | 0.1140 | 0.09358 | 0.04980 | 0.05882 | 0.2227 | 0.07376 |

| 926954 | 16.600 | 28.08 | 108.30 | 858.1 | 0.08455 | 0.10230 | 0.09251 | 0.05302 | 0.1590 | 0.05648 | ... | 18.98 | 34.12 | 126.70 | 1124.0 | 0.1139 | 0.30940 | 0.34030 | 0.14180 | 0.2218 | 0.07820 |

| 86208 | 20.260 | 23.03 | 132.40 | 1264.0 | 0.09078 | 0.13130 | 0.14650 | 0.08683 | 0.2095 | 0.05649 | ... | 24.22 | 31.59 | 156.10 | 1750.0 | 0.1190 | 0.35390 | 0.40980 | 0.15730 | 0.3689 | 0.08368 |

319 rows × 30 columns

##################################

# Initializing the correlation matrix

##################################

breast_cancer_train_correlation_matrix = pd.DataFrame(np.zeros((len(breast_cancer_train_correlation.columns), len(breast_cancer_train_correlation.columns))),

columns=breast_cancer_train_correlation.columns,

index=breast_cancer_train_correlation.columns)

##################################

# Calculating different types

# of correlation coefficients

# per variable type

##################################

for i in range(len(breast_cancer_train_correlation.columns)):

for j in range(i, len(breast_cancer_train_correlation.columns)):

if i == j:

breast_cancer_train_correlation_matrix.iloc[i, j] = 1.0

else:

col_i = breast_cancer_train_correlation.iloc[:, i]

col_j = breast_cancer_train_correlation.iloc[:, j]

# Detecting binary variables (assumes binary variables are coded as 0/1)

is_binary_i = col_i.nunique() == 2

is_binary_j = col_j.nunique() == 2

# Computing the Pearson correlation for two continuous variables

if col_i.dtype in ['int64', 'float64'] and col_j.dtype in ['int64', 'float64']:

corr = col_i.corr(col_j)

# Computing the Point-Biserial correlation for continuous and binary variables

elif (col_i.dtype in ['int64', 'float64'] and is_binary_j) or (col_j.dtype in ['int64', 'float64'] and is_binary_i):

continuous_var = col_i if col_i.dtype in ['int64', 'float64'] else col_j

binary_var = col_j if is_binary_j else col_i

# Convert binary variable to 0/1 (if not already)

binary_var = binary_var.astype('category').cat.codes

corr, _ = pointbiserialr(continuous_var, binary_var)

# Computing the Phi coefficient for two binary variables

elif is_binary_i and is_binary_j:

corr = col_i.corr(col_j)

# Computing the Cramér's V for two categorical variables (if more than 2 categories)

else:

contingency_table = pd.crosstab(col_i, col_j)

chi2, _, _, _ = chi2_contingency(contingency_table)

n = contingency_table.sum().sum()

phi2 = chi2 / n

r, k = contingency_table.shape

corr = np.sqrt(phi2 / min(k - 1, r - 1)) # Cramér's V formula

# Assigning correlation values to the matrix

breast_cancer_train_correlation_matrix.iloc[i, j] = corr

breast_cancer_train_correlation_matrix.iloc[j, i] = corr

# Displaying the correlation matrix

display(breast_cancer_train_correlation_matrix)

| radius_mean | texture_mean | perimeter_mean | area_mean | smoothness_mean | compactness_mean | concavity_mean | concave points_mean | symmetry_mean | fractal_dimension_mean | ... | radius_worst | texture_worst | perimeter_worst | area_worst | smoothness_worst | compactness_worst | concavity_worst | concave points_worst | symmetry_worst | fractal_dimension_worst | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| radius_mean | 1.000000 | 0.296754 | 0.997917 | 0.984916 | 0.145069 | 0.521699 | 0.653960 | 0.829568 | 0.178392 | -0.362551 | ... | 0.971938 | 0.289640 | 0.967088 | 0.948294 | 0.072870 | 0.428210 | 0.496566 | 0.737347 | 0.217375 | -0.038225 |

| texture_mean | 0.296754 | 1.000000 | 0.300384 | 0.293404 | -0.081253 | 0.194167 | 0.243735 | 0.250668 | 0.068573 | -0.098025 | ... | 0.319664 | 0.923246 | 0.322248 | 0.312728 | 0.004546 | 0.214524 | 0.207238 | 0.222253 | 0.068177 | 0.054058 |

| perimeter_mean | 0.997917 | 0.300384 | 1.000000 | 0.985186 | 0.180173 | 0.570667 | 0.691792 | 0.855888 | 0.209569 | -0.313834 | ... | 0.972461 | 0.293613 | 0.972875 | 0.951121 | 0.103165 | 0.468946 | 0.531674 | 0.762926 | 0.235097 | 0.006081 |

| area_mean | 0.984916 | 0.293404 | 0.985186 | 1.000000 | 0.155662 | 0.519067 | 0.673052 | 0.829811 | 0.177404 | -0.320518 | ... | 0.958283 | 0.275844 | 0.955452 | 0.964157 | 0.080662 | 0.406863 | 0.489149 | 0.712358 | 0.188049 | -0.033382 |

| smoothness_mean | 0.145069 | -0.081253 | 0.180173 | 0.155662 | 1.000000 | 0.618184 | 0.497254 | 0.538361 | 0.530327 | 0.518288 | ... | 0.197350 | -0.022554 | 0.220016 | 0.198870 | 0.792618 | 0.414057 | 0.397902 | 0.487924 | 0.337613 | 0.444924 |